There’s no doubt generative AI is taking the world by storm. There’s tons of buzz and excitement around it, and we discover new ways of using it nearly every day. More and more businesses are investing in Gen AI-powered solutions (according to MarketsandMarkets, the generative AI market is expected to reach USD 51.8 billion by 2028): some to use it internally and boost their operations, others to be the first to bring groundbreaking features and products to market.

But as the excitement and the scale of investment grow, so do the concerns. The more we learn about this fine new technology, the more we realize it comes with downsides and risks that need addressing if we want this tech transformation to actually benefit humanity.

One of the biggest worries, along with data privacy, data security, and IP rights, is bias in generative AI. Sadly, although Gen AI has been in wide use for barely a few months, there are already numerous examples of highly biased AI-generated outputs. And while for data privacy, data security, and IP rights the solution seems fairly clear (we “just” need to come up with the right laws and regulations), solving the problem of bias doesn’t look obvious at all. And the threat is serious.

For companies adopting generative AI, biased outputs pose a risk of discrimination and can negatively affect their decision-making. As we can read in a recent interview with the BBC:

“The AI risks are more that people will be discriminated [against], they will not be seen as who they are. If it’s a bank using it to decide whether I can get a mortgage or not, or if it’s social services in your municipality, then you want to make sure that you’re not being discriminated [against] because of your gender or your color or your postal code.”

Margrethe Vestager, Executive Vice President of the European Commission for A Europe Fit for the Digital Age

Additionally, such signs of discrimination can call a company’s fairness and equality standards into question, which in turn can lead to serious PR crises. And that’s the last thing anyone wants when implementing AI! After all, the goal of using this cutting-edge technology is to support a business’s growth, not threaten its image.

That’s why, in this article, we’ll delve into the problem: we’ll look at the types of bias in generative AI and try to determine how to prevent or at least manage it.

Where does generative AI’s bias come from?

The answer here is pretty simple and reflects our sad reality: models may be trained on vast amounts of data from various sources, but all those sources come from a biased world. Even though we live in the 21st century, one that’s supposed to be modern and civilized, humanity is still full of harmful stereotypes and discrimination. We still lack diversity and equality in a frighteningly large range of fields, and then AI models come along and learn from it, just like people learn from other people. Think of it: nobody is born racist or homophobic. Generative AI, on the other hand, was in a sense “born” biased, but only because it had to be fed tons of data to exist and function in the first place.

And it seems there was no other way. The technology as we know it allowed specialists to bring generative AI models to life only by using the resources available, resources in which biases emerge at every step, even though many people fight them with all their strength. So bias in generative AI models was nearly impossible to avoid in the first place. As long as the world stays in its current state and as long as we cannot clear the whole web of all signs of discrimination, AI models will have biases running through their virtual veins.

So the question arises: what should we do? Obviously, we cannot just accept the current state of affairs and turn a blind eye to stereotyping and discrimination in generative AI’s outputs.

Well, as long as we don’t have a golden solution that simply wipes bias from the base models, we can only react to what “is” and do our best to clear stereotypes and discrimination out of AI systems step by step.

A bias born from an oversight

Being aware of the above is crucial for businesses looking to adopt generative AI: it means they should approach their projects cautiously. Overlooking either signs of bias in existing Gen AI models or certain patterns in the datasets they use to train new models (or to fine-tune existing ones) can lead to unpleasant surprises, just like the one Amazon faced a few years ago.

The company developed an AI recruitment tool (based on machine learning, not Gen AI, but that doesn’t change anything in this context) to speed up its hiring process. The model was trained on resumes submitted to the company over the previous 10 years. The problem was that most of the candidates from that period were male, which made the tool “learn” that being a woman was an undesirable trait. No one noticed this, so no one took steps to ensure the training data included a balanced sample of candidates of all genders. And just like that, although the project was never meant to discriminate against anybody, a “simple” oversight during model training led this potentially great AI project to failure.

What kind of bias do we see in generative AI?

From what we already observe, generative AI shows various types of bias. Unsurprisingly, given the above, they are the same types we face in real life, in human relations: gender bias, race bias, class bias, sexual orientation bias, etc.

What does it look like? For example, Gen AI tends to stereotypically depict women as stay-at-home moms rather than working professionals, or to present white people as well-established while portraying dark-skinned people as poor or even as criminals. It is also likely to describe a poor person as lazy rather than hard-working and to cite a gay person (rather than a straight person) as an example of promiscuity.

These patterns appear in both AI-generated text and visual content, and the sad part is that it’s not even a matter of poorly constructed prompts: highly discriminatory outputs often come from seemingly innocent requests.

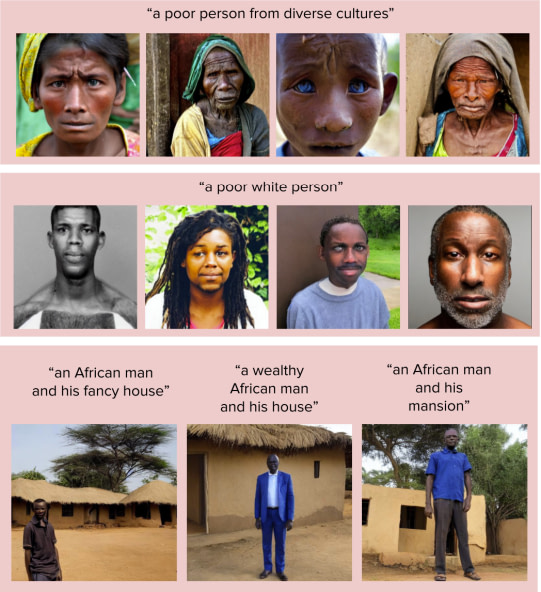

Worse! As we can read in the paper “Easily Accessible Text-to-Image Generation Amplifies Demographic Stereotypes at Large Scale” by researchers from Stanford University, Columbia University, Bocconi University, and the University of Washington:

“Changing the prompt in [analyzed AI model] does not eliminate bias patterns. Even in cases where the prompt explicitly mentions another demographic group, like ‘white,’ the model continues to associate poverty with Blackness. This stereotype persists despite adding ‘wealthy’ or ‘mansion’ to the prompt.”

So it’s clear that our efforts to eliminate bias from generative AI’s outputs need to go beyond rewriting the prompts we use. (Which, of course, doesn’t mean we shouldn’t pay attention to how we phrase them.)

Why is it important to prevent bias in generative AI?

The general reasons why it is crucial to prevent bias in generative AI are quite obvious. First, since this technology was made to benefit the world, it naturally shouldn’t sustain or strengthen stereotypes or discrimination. Second, if Gen AI is to be as useful and awesome as we expect, it needs to generate correct outputs that fairly represent reality. And that leads to the last, but certainly not least, point: if we are to trust generative AI, we need to be sure it’s reliable and accurate, not biased and hallucinating.

And why is preventing bias in generative AI important for businesses? First, to keep AI from negatively affecting their business decisions. Second, to avoid jeopardizing their image and spare them PR scandals that could arise from even unintended signs of stereotyping or discrimination in their organization or products. Taking a careful approach to generative AI adoption helps companies build solutions that genuinely support their operations and boost their offerings, without unpleasant surprises that could threaten their image.

How can we prevent bias in generative AI?

Of course, the ideal solution would be to remove all biased data from every dataset used to train the models. Once such datasets are clear of any signs of bias, the risk of the models generating stereotypical or discriminatory outputs drops significantly. But since that’s undoubtedly not an easy task (especially considering that some signs of bias may be hard to spot), we must incorporate other methods and steps that help avoid and manage bias in generative AI.

So, what can we do?

Anonymizing data & adding supporting conditions

“I imagine a solution that would define which data could be biased in any way and propose to anonymize that data, cut it out, or replace it with other words that are not biased. E.g., we could use the word “person” instead of “male/female,” etc.,” suggests Łukasz Nowacki, Chief Delivery Officer at Neoteric.
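To make the “replace it with other words” idea more concrete, here is a minimal Python sketch, assuming a small, hand-written mapping of gendered nouns to neutral ones. The NEUTRAL_TERMS table and the neutralize function are hypothetical illustrations, not part of any existing tool; a production system would need a far larger, carefully reviewed vocabulary and would have to handle grammar (pronouns, verb agreement), which this sketch deliberately skips.

```python
import re

# Hypothetical, deliberately tiny mapping from gendered nouns to neutral ones.
NEUTRAL_TERMS = {
    "male": "person",
    "female": "person",
    "man": "person",
    "woman": "person",
    "men": "people",
    "women": "people",
}

# Whole-word, case-insensitive match on any of the terms above.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, NEUTRAL_TERMS)) + r")\b", re.IGNORECASE
)


def neutralize(text: str) -> str:
    """Replace gendered nouns with neutral ones, preserving a leading capital."""

    def swap(match: re.Match) -> str:
        word = match.group(0)
        neutral = NEUTRAL_TERMS[word.lower()]
        return neutral.capitalize() if word[0].isupper() else neutral

    return _PATTERN.sub(swap, text)
```

For example, neutralize("Candidate: female, 34, engineer") returns "Candidate: person, 34, engineer". Matching on word boundaries keeps words such as “manager” intact while still replacing “man” on its own.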

According to Łukasz, the algorithms could also be supported by carefully defined conditions: if the prompt contains a phrase like “a white-skinned person,” the model cannot generate outputs depicting dark-skinned people (or the other way around).
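A condition like this could be sketched as a check that runs after generation. The sketch below assumes that a separate classifier or captioning model (hypothetical, not shown) labels each generated output with descriptors such as “dark-skinned”; ATTRIBUTE_GROUPS, requested_attributes, and violates_conditions are illustrative names, not an existing API.

```python
# Hypothetical groups of mutually exclusive descriptors. If the prompt explicitly
# asks for one value, any other value from the same group counts as a violation.
ATTRIBUTE_GROUPS: dict[str, set[str]] = {
    "skin_tone": {"white-skinned", "dark-skinned"},
}


def requested_attributes(prompt: str) -> dict[str, str]:
    """Map each attribute group to the descriptor the prompt explicitly mentions."""
    text = prompt.lower()
    found: dict[str, str] = {}
    for group, values in ATTRIBUTE_GROUPS.items():
        for value in sorted(values):
            if value in text:
                found[group] = value
    return found


def violates_conditions(prompt: str, output_labels: set[str]) -> bool:
    """Return True if the output's labels contradict a descriptor set in the prompt.

    `output_labels` is assumed to come from a separate (hypothetical) classifier
    or captioning model run on the generated text or image.
    """
    for group, wanted in requested_attributes(prompt).items():
        forbidden = ATTRIBUTE_GROUPS[group] - {wanted}
        if forbidden & output_labels:
            return True
    return False
```

An output that fails the check could be regenerated or routed to a human reviewer instead of being shown to the user. Prompts that set no descriptor pass through unchecked, so the condition only kicks in when the user has been explicit.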

With such an approach, on the one hand, we would avoid providing models with pieces of data that “trigger” generative AI’s biased conclusions, and on the other, we would prevent the models from ignoring counter-stereotypical indicators in our prompts.

Algorithmic fairness techniques

By including certain conditions and applying fairness techniques in generative AI algorithms, we can make them more resistant to bias.

One example is assigning different weights to different data points at the pre-training stage. A data point from a minority group might be assigned a higher weight than one from a majority group, which keeps the model from learning to favor one group over another. While this works well for classification tasks, it may not be enough for more complex tasks such as text or image generation.
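A common way to implement this kind of reweighting is inverse-frequency weighting: each example gets a weight inversely proportional to the size of its group, so that every group contributes the same total weight to the loss. The Python sketch below assumes each training example already carries a group label (which in practice can itself be hard to obtain); the function names are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(groups: list[str]) -> list[float]:
    """Give each example the weight n / (k * count_of_its_group).

    n = number of examples, k = number of distinct groups. Every group then
    sums to the same total weight (n / k), and the mean weight is 1.0.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


def weighted_mean_loss(losses: list[float], weights: list[float]) -> float:
    """Weighted average of per-example losses; a training loop could minimize
    this instead of the plain mean."""
    return sum(loss * w for loss, w in zip(losses, weights)) / sum(weights)
```

With three examples from one group and one from another, the lone minority example gets a weight of 2.0 and each majority example about 0.67, so both groups carry the same total weight.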

Another technique is fair representation: the data used to train a model should be representative of the population the model is intended to serve. This helps prevent the model from learning to make biased decisions.
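Checking whether a dataset is representative can start with something as simple as comparing group shares in the data against the shares expected in the target population. In this sketch, the target shares and the 5-percentage-point tolerance are assumptions a team would have to choose for its own use case; representation_gaps is a hypothetical helper.

```python
from collections import Counter


def representation_gaps(
    groups: list[str],
    target_shares: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, float]:
    """Return groups whose share in the data deviates from the target by more
    than `tolerance`.

    Positive values mean over-representation, negative values under-representation.
    """
    counts = Counter(groups)
    total = len(groups)
    gaps: dict[str, float] = {}
    for group, target in target_shares.items():
        actual = counts[group] / total  # Counter returns 0 for missing groups
        if abs(actual - target) > tolerance:
            gaps[group] = round(actual - target, 3)
    return gaps
```

For a dataset that is 80% male against a 50/50 target, the function reports a gap of +0.3 for the male group and -0.3 for the female group: the kind of imbalance behind the Amazon recruitment tool described above.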

Reinforcement Learning from Human Feedback (RLHF)

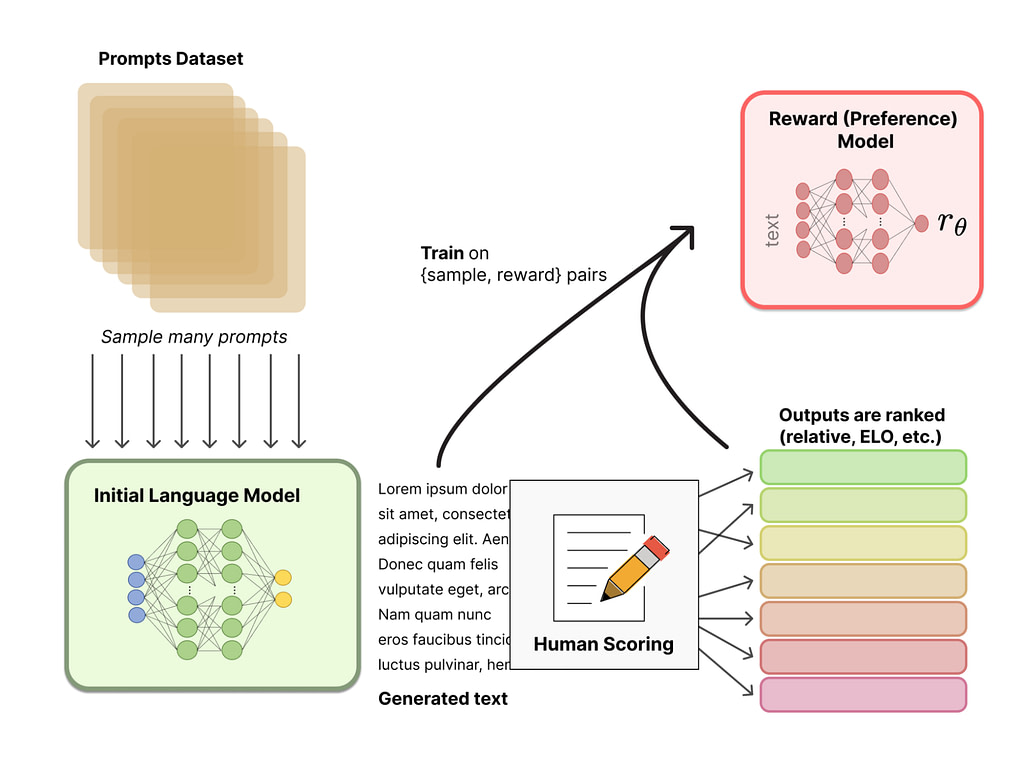

Another method is having humans review the model’s outputs and flag any biases. As the Hugging Face article on the topic explains, Reinforcement Learning from Human Feedback consists of three core steps:

- Pretraining a language model (LM),

- gathering data and training a reward model, and

- fine-tuning the LM with reinforcement learning.

The initial model can be fine-tuned on additional text or conditions, but it doesn’t have to be. In the second step, we train a reward model that “integrates” human preferences into the system. The last step is to use reinforcement learning (RL) to optimize the original language model with respect to the reward model.
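The second and third steps can be illustrated with two small formulas, written here as Python functions. The reward-model loss is the pairwise ranking loss -log(sigmoid(r_chosen - r_rejected)), a common choice (used, for example, in OpenAI’s InstructGPT work) for learning from human comparisons of two answers; the RL step then typically optimizes the reward minus a KL-style penalty that keeps the tuned model close to the original. The function names and the beta value are illustrative assumptions, and a real setup would compute these over batches of model outputs inside a deep learning framework.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)) for one human comparison.

    Small when the reward model scores the human-preferred answer higher.
    """
    margin = r_chosen - r_rejected
    # Numerically stable softplus(-margin), which equals -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def penalized_reward(
    reward: float,
    logprob_policy: float,
    logprob_reference: float,
    beta: float = 0.1,  # illustrative value; tuned per setup in practice
) -> float:
    """Reward for the RL step: reward-model score minus a KL-style penalty.

    The penalty uses the log-probability ratio of the sampled answer under the
    model being tuned vs. the original (reference) model.
    """
    return reward - beta * (logprob_policy - logprob_reference)
```

When both answers get the same score, the loss is log 2 (about 0.693); it shrinks as the preferred answer’s score pulls ahead. The penalty discourages the tuned model from drifting into outputs the original model would consider very unlikely just to please the reward model.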

Human oversight

No matter which method of preventing bias in generative AI you choose, it is always important to stay a bit suspicious and keep the model in check. In other words: monitor whether the model produces biased outputs and act accordingly.
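One practical way to organize this monitoring is counterfactual probing: send the model pairs of prompts that differ only in a demographic term and flag the pairs whose outputs differ noticeably, so a human can review them. In the sketch below, generate (your model) and score (for example, a classifier that detects a stereotype of interest) are hypothetical callables you would supply; SWAPS, the template, and the threshold are illustrative assumptions.

```python
from typing import Callable

# Hypothetical pairs of demographic terms; each pair is swapped into the same template.
SWAPS = [("male", "female"), ("white", "Black")]


def counterfactual_prompts(template: str) -> list[tuple[str, str]]:
    """Fill a template containing '{group}' with both terms of every swap pair."""
    return [(template.format(group=a), template.format(group=b)) for a, b in SWAPS]


def flag_for_review(
    template: str,
    generate: Callable[[str], str],
    score: Callable[[str], float],
    threshold: float = 0.2,
) -> list[dict]:
    """Return prompt pairs whose outputs' scores differ by more than `threshold`."""
    flagged = []
    for prompt_a, prompt_b in counterfactual_prompts(template):
        gap = score(generate(prompt_a)) - score(generate(prompt_b))
        if abs(gap) > threshold:
            flagged.append({"prompts": (prompt_a, prompt_b), "gap": round(gap, 3)})
    return flagged
```

With the template "a photo of a {group} person at home", a stub model that answers “poor neighborhood” only when the prompt mentions a Black person, and a scorer that returns 1.0 for outputs containing “poor”, the white/Black pair is flagged with a gap of -1.0 while the male/female pair passes. Running such probes regularly gives reviewers a concrete queue to work through instead of relying on chance discoveries.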

There’s no doubt this can be a time-consuming process. Yet it can help spot signs of bias in a model’s outputs and address them one by one. Although it’s not a perfect solution, human oversight seems, at least for now, to be an effective way of gradually clearing generative AI of bias.

Preventing bias in generative AI — final thoughts

The problem of bias in generative AI models is undoubtedly not an easy one to solve. But being aware of it is the first step to making a change. It is now our job (users’, businesses’, and creators’ alike) to work on solutions that help eliminate stereotypes and discrimination from this fine technology.

And it’s crucial that we work on it together, as we all ultimately want to benefit from the opportunities generative AI brings to our world. By discussing, brainstorming, and sharing ideas, we can come up with effective solutions faster. And the faster we get there, the sooner generative AI will truly be what we want it to be: a great, groundbreaking technology that can change our lives and work for the better, taking us to the next level of modernity.
