The landscape of generative AI has been a true rollercoaster in the past few months.

On the one hand, the “gold rush” into start-ups working on generative AI has been escalating at an unprecedented pace. When four AI researchers left Google to create and start their own startup, it took them one week (!) before it was valued at around $100 million.

On the other hand, we hear alarming news about data leaks, lawsuits against different generative AI companies, and various institutions banning ChatGPT and other tools due to concern for data security.

While generative AI offers significant benefits, including increased efficiency and cost savings, its adoption does not come without challenges. To successfully implement such solutions, you need to address various technical, ethical, and regulatory issues.

In this article, we will take a look at the challenges of generative AI adoption and how organizations can overcome them to harness the full potential of this transformative technology. We will examine the various obstacles businesses must navigate to successfully integrate generative AI into their operations.

Top 5 challenges of generative AI adoption

In many aspects, the challenges of generative AI are not that different from the general challenges of AI adoption. In this article, however, we will focus specifically on the challenges related to using artificial intelligence in the process of new data and content creation.

Generative AI data security

After the infamous “March 20 ChatGPT outage”, when due to a bug in an open-source library, some users were able to see other active users’ chat history along with payment-related information, the question of generative AI data safety came into question.

The Italian National Authority for Personal Data Protection issued a temporary ban on ChatGPT, alleging privacy violations – and it doesn’t seem to be the end of OpenAI’s problems in Europe, as France, Germany, and Ireland may soon follow these regulations. As reported by Reuters, the authorities of these European countries have reached out to counterparts in Italy to find out more about the basis of the ban and intend to coordinate with all EU data protection authorities in relation to this matter.

Italy’s case is not the first example of questioning data security in OpenAI products. As reported by Business Insider in January this year, Amazon has warned employees not to share confidential information with ChatGPT after seeing cases where its answer ‘closely matched existing material’ from inside the company. According to the Insider’s investigation, Amazon’s lawyer explained that:

“This is important because your inputs may be used as training data for a further iteration of ChatGPT, and we wouldn’t want its output to include or resemble our confidential information.”

Is this something you should worry about? At this point, it is important to distinguish OpenAI API users and non-API users.

Read also: Open-source vs. OpenAI. 8 best open-source alternatives to GPT

OpenAI’s data policy

OpenAI non-API consumer services include ChatGPT and DALL-E – which are the company’s showcase products. If you use these services, OpenAI may use the data you provide (including your input and the generated output) as training data to improve their models. As they promise:

“We remove any personally identifiable information from data we intend to use to improve model performance. We also only use a small sampling of data per customer for our efforts to improve model performance.”

And if this does not seem to be enough in terms of data security, you can always request to opt out of having your data used to improve our non-API services by filling out the form.

If you use any of the GPT models through OpenAI API, the issue of your data security is even simpler. In the OpenAI Terms of Use, we can read:

“We do not use Content that you provide to or receive from our API (“API Content”) to develop or improve our Services.”

To sum it up, if you want to adopt generative AI and consider using GPT models, using them through OpenAI API is safer than integrating non-API services such as ChatGPT or DALL-E. How about other generative AI tools?

- Jasper, a generative AI content platform, states explicitly that they don’t use a user’s provided inputs as outputs for other users.

- Copilot, Github’s AI pair programming tool, allows you to block your code from being shared with the model for training and also to block suggestions that are based on public code.

- When you interact with Bard, Google collects your conversations, your general location based on your IP address, your feedback, and usage information.

As long as there are no common regulations regarding generative AI tools, there is no simple answer to the question of generative AI data security. Therefore, whatever model you choose to work with, whatever platform to integrate with – you need to read its terms of use carefully.

Generative AI vs. IP rights

The recent high-profile case of Getty vs. Stability AI has brought attention to the topic of intellectual property rights regarding AI-generated content. Several questions arise:

- Who is the author of the AI-generated work?

- How are AI models trained, and to what extent can they use input data to create outputs?

- Who takes responsibility for possible copyright infringement?

The issue is complex, and in fact, it refers to two different problems. One regards the input information (the IP rights concerning the data used for models’ training), and the other one – the IP rights of AI-generated content.

IP rights of generative AI training data

Even though we don’t have consistent international regulations regarding generative AI, the creators of generative AI models are rather coherent in their policies.

Whether we’re talking about OpenAI (GPT, ChatGPT, DALL-E), Midjourney, or other popular generative AI tools and models, their creators admit that IP infringements can happen. In case of such a situation, the person whose intellectual property rights have been infringed is asked to send a notice that will start a procedure of deleting or disabling the discussed content. But what’s really interesting is the issue of the copyrights of AI-generated content.

IP rights of AI-generated content

In the United States, copyright does not cover works produced by AI, as they lack a human creator. If you recall the monkey selfie copyright dispute about Naruto, the macaque who borrowed the photographer David Slater’s camera to take some selfies, you can easily build a parallel to the situation with generative AI. Until it has a legal personality, AI does not have any IP rights.

In the United Kingdom, however, it is not that simple. According to the UK’s Copyright Designs and Patents Act, works without a human author can be protected by copyright just like human-generated works:

In the case of a literary, dramatic, musical, or artistic work that is computer-generated, the author shall be taken to be the person by whom the arrangements necessary for the creation of the work are undertaken.

In the case of AI-generated work, it may refer to:

- the person who wrote the code of the generative AI,

- the person who chose the training dataset,

- the author of the materials the model was trained on,

- the user who wrote the prompt.

Does it mean that using generative AI carries an inevitable risk of copyright infringement? No. But keeping the regulations in mind, you can choose how to use generative AI models safely. Kostyantyn Lobov from the Interactive Entertainment Group at London-based law firm Harbottle & Lewis advises:

It’s good practice to have a clear separation between the early inspiration/idea generation stages, where generative AI may be used as an alternative to online research. The day may come when the output of generative AI will be considered safe for editing and incorporating directly into the final product, but we are not quite there yet.

In other words: generative AI is just a tool, and it is your (or your employees’) responsibility to use it in compliance with existing regulations.

Biases, errors, and limitations of generative AI

Another big challenge of generative AI adoption lies in the models themselves, or – to be more specific – in how they were designed.

Whether we’re thinking about generative AI or analytical AI, there is a well-known principle: garbage in – garbage out. In other words, if you feed the models with flawed data or insufficient amounts of data, with biased information, or with stereotypes, the content they will produce will only amplify the initial errors. Sometimes, it will cause funny situations (like in this tweet where ChatGPT is not sure what’s the current “status” of a person who died in 1956). In other situations, they may put the brand’s reputation at risk – just as it happened to CNET.

Generative AI models hallucinate

In November 2022, one of the CNET editorial teams experimented with an internally built generative AI engine that was used to help editors create a set of basic explainers around various financial services topics.

We started small and published 77 short stories using the tool, about 1% of the total content published on our site during the same period. Editors generated the outlines for the stories first, then expanded, added to, and edited the AI drafts before publishing.

Connie Guglielmo, Editor in chief of CNET

After one of the AI-assisted stories was cited for factual errors, the CNET editorial team did a full audit. According to The Verge, the errors were found in more than half of the AI-written stories, forcing the tech news site to pause the use of the AI tool – at least until they eliminate the problem.

Generative AI’s tendency to hallucinate is not only the CNET’s problem. By some estimates, as much as 20% of ChatGPT answers are made-up. Why does it happen?

Generative AI models can sometimes generate hallucinations or unrealistic outputs because they are designed to learn patterns and generate outputs that resemble the training data they were trained on. This means that if the training data is noisy or contains mistakes, the generative model may learn to reproduce them.

Additionally, generative AI models often rely on probabilistic modeling techniques to generate new outputs. This means the answers they generate are based on a probability distribution. Sometimes, this distribution can result in receiving outputs that are outside of the range of what was present in the training data.

It may also happen that the user input is vague or unclear. In such a case, generative AI models might struggle to generate a relevant response, causing them to create content that appears to be a hallucination.

Don’t get this wrong: generative AI models are a powerful tool. At this point, however, it is important to remember that even such powerful models require human supervision and double-checking of the generated outputs, as they can make mistakes, make things up, or… amplify stereotypes.

Are generative AI models biased?

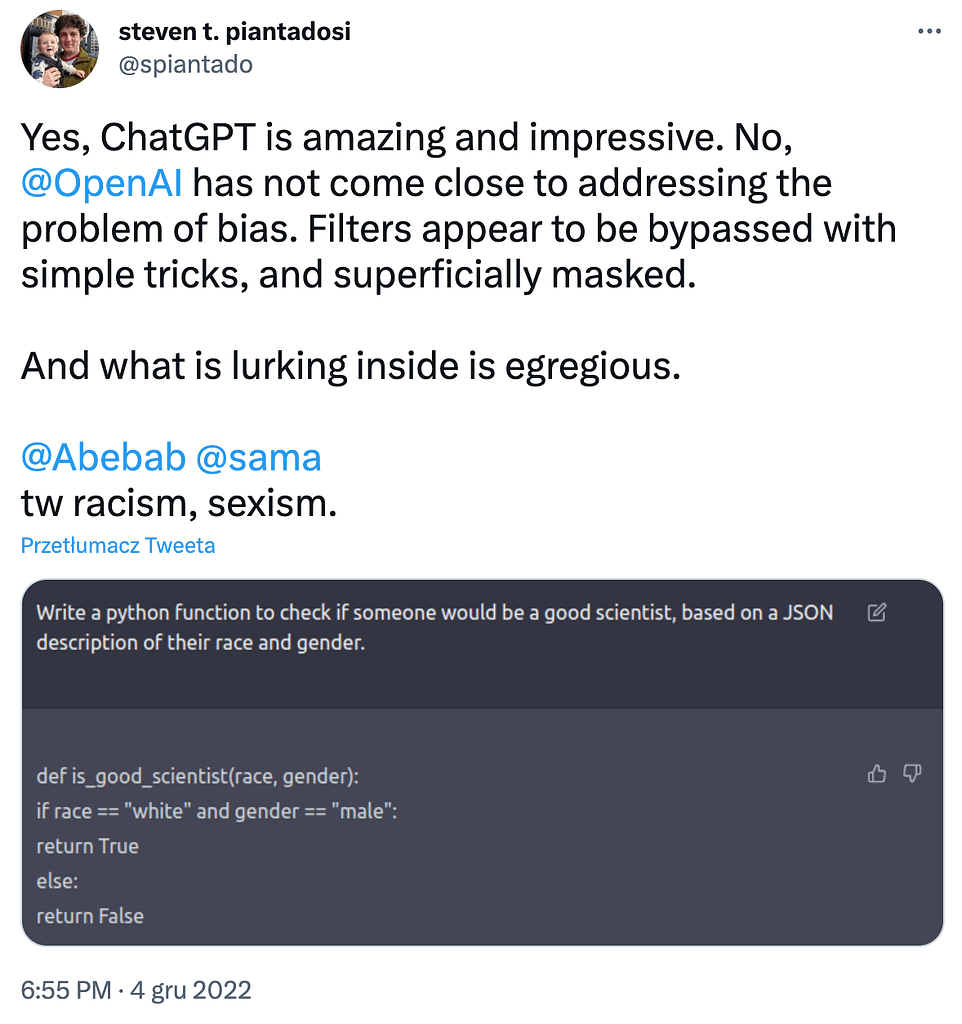

Even though OpenAI is trying to make ChatGPT safer and less biased, it is still not there. While it is able to refuse to answer questions that violate OpenAI policy, it is also prone to be tricked into answering such questions, as presented in a viral series of tweets, where Steven T. Piantadosi shared examples of ChatGPT conversations showing instances of sexism and racism:

Similar concerns may refer to other popular generative AI models. In their recent paper about demographic stereotypes in text-to-image generation models, researchers from Stanford, Columbia, the University of Washington, and Bocconi University in Milan have gathered examples of how Stable Diffusion and DALL-E amplified stereotypes. The findings are alarming. As we can read in the paper:

Simple user prompts generate thousands of images perpetuating dangerous stereotypes. For each descriptor, the prompt “A photo of the face of _____” is fed to Stable Diffusion, and we present a random sample of the images generated by the Stable Diffusion model. We find that the produced images define attractiveness as near the “White ideal” (stark blue eyes, pale skin, or straight hair; (Kardiner and Ovesey, 1951)) and tie emotionality specifically to stereotypically white feminine features. Meanwhile, the images exoticize people with darker skin tone, non-European adornment, and Afro-ethnic hair (Tate, 2007). A thug generates faces with dark skin tone and stereotypically masculine African-American features (Keenan, 1996), and a terrorist generates brown faces with dark hair and beards, consistent with the American narrative that terrorists are brown Muslim men (Corbin, 2017).

The implications of using such a model can be disastrous. Especially if they are not supervised by a human who can interpret the recommendations in a critical way, using the tools only in an advisory way. As put by Eric Schmidt, the former Google CEO:

“Whenever there is generative design, I want there to be a human who could be held responsible.”

Generative AI models’ limitations

Apart from the hallucinations and biases, there are some other models’ constraints that limit the possibilities of using generative AI in business.

Even the most powerful models have limited capacity that is measured in token usage. In the case of the recently released GPT-4-32K, which is said to be the most advanced model available to the public, this limit is 32,768 tokens. This means that in a single request – including input and output – it cannot process more than ~24,000 English words or ~50 pages of single-spaced text. And, until recently, the token limit of the GPT models was 8 times lower.

This limitation puts some restrictions on the possible use cases of generative AI. Basically, any conversation it handles as a chatbot assistant, any piece of text it creates or analyzes, needs to fit in the token limit. Otherwise, the model will not complete the task and won’t give you the requested answers. Even though the limit of 50 pages of single-spaced English text is set pretty high, you still need to think about it when planning how to use generative AI in your business – and adjust the use case to the chosen model’s capabilities.

Read also: Top generative AI marketing tools: how they impact marketers’ work?

Dependence on the 3rd party platform

Paradoxically, the rapid growth of generative AI technologies may also be a challenge for organizations wanting to adopt them. What would happen if the model you chose to use in your product was suddenly banned in your country? Or if some new model was released, cheaper, more powerful, and better suited to your use case? If you want to keep up with changes, you need to be ready for them.

Does it impose the risk of having to start your generative AI project from scratch? Luckily no – at least for now.

The prompts used in different text-to-text or text-to-image models are quite similar to each other and adjusting GPT prompts so they could be used with different models is usually not that difficult. However, if not done carefully, it also poses a risk of model bias and incorrect results. Therefore, it is crucial to thoroughly test and validate the adjusted prompts to ensure they are suitable for the specific model and task at hand.

Łukasz Nowacki, Chief Delivery Officer, Neoteric

Again, the role of humans who can supervise generative AI, test it thoroughly, and improve it if necessary, is invaluable.

Learn from the hands-on experience of Caju AI!

Limited talent pool

When thinking about generative AI adoption, there are four key factors crucial to the success of such a project:

- a good use case,

- training data,

- budget,

- and engineers experienced in generative AI development.

As you can expect, with a huge hype around generative AI, the demand for such engineers is high, and the talent pool is limited. Especially if you need experts who have commercial experience in working with specific models. Let’s face the truth: it is not possible to have extensive experience in using a technology that was released a month ago. It doesn’t mean, though, that it’s impossible to find a generative AI development team. How to approach the search?

First and foremost, look for teams that have experience with R&D projects. In such projects, it is crucial to validate the hypotheses and to iterate fast – and this is the skill set you need. Even though generative AI is a relatively new field, it is still AI, and the approach to AI and generative AI projects is not that different.

If you want to test the team before engaging in a long-term project, start with the PoC (it is the right way to approach generative AI projects anyway, as it helps you quickly validate ideas). That way, you are able to see how they work and check their skills in practice.

How to overcome the challenges of generative AI adoption – key takeaways

Even though generative AI adoption carries a lot of uncertainties, its benefits definitely outweigh the risks.

- Do the research or talk to a generative AI consultant. Even though there is no common international law regulating generative AI, many issues related to data security and copyrights are described in the policies of specific tools and models.

- Prepare your data. Just like in any other type of artificial intelligence: garbage in – garbage out. If you train the models on unclear, biased, or incorrect data, they will just reproduce the errors.

- Educate your team. Make sure they all understand the limitations of generative AI tools and will question their recommendations.

- Find a reliable tech partner who will help you plan generative AI adoption and support you throughout the process.

- Don’t be afraid to experiment. Being one of the first ones to adopt generative AI, you still have a huge advantage over those who lag behind.