The trust we have in any technology is usually limited. With artificial intelligence, we can be tempted to be even more skeptical: if it’s supposed to display intelligence, the bar is set high. The problem grows when we consider that there’s no clear, uniform definition of human intelligence. If the original is not fully understood, the requirements for the imitator cannot be clearly defined.

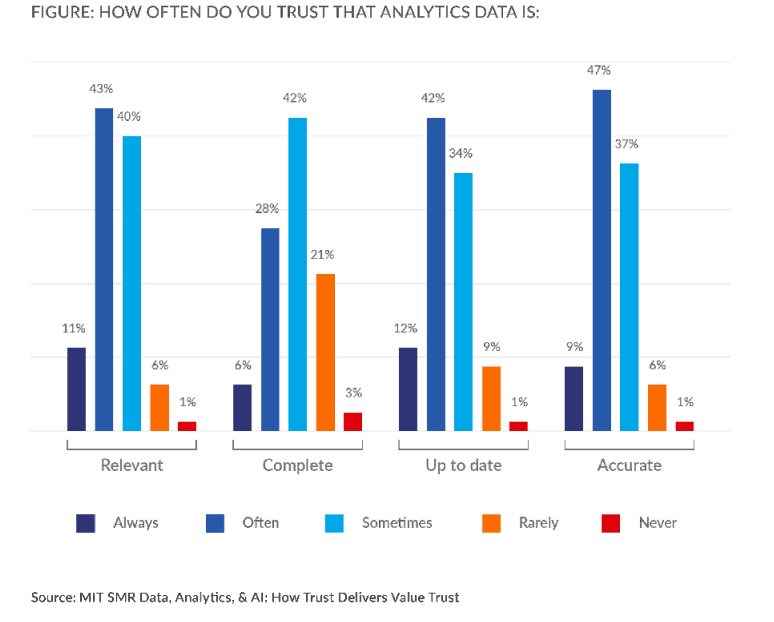

Research shows that we have trouble trusting data. A survey by MIT Sloan Management Review and SAS found that only 9% of business executives always trust that data is accurate. While trust in data is growing, the group of people who are still reluctant to fully trust it remains large, as shown in the chart below.

How much can we trust AI?

Most commonly, people don’t trust AI, which reflects their unwillingness to trust data. There are various reasons why people are skeptical about artificial intelligence and how much it can be trusted. It’s a technology that is difficult to grasp, and there’s no trust without understanding. There’s also bias and unfair treatment. So why exactly don’t we trust the decisions made by AI?

We don’t trust what we don’t understand

Artificial intelligence is a complex concept and is still often misunderstood. Various materials (magazines, books, videos, online courses) offer great educational value on what AI is, how it works, and what limitations it has. Yet many popular media outlets and movies still use the “scary robot” theme to make us worry about basically every aspect of our lives: losing our jobs, having to let machines decide about important matters (getting a mortgage, how a disease is treated, qualification for medical procedures, sentences in legal cases), or, in a sci-fi scenario, being ruled by superintelligent machines.

Looking at the general understanding of AI as a technology, it’s clear that education is crucial to help people gain more knowledge of the subject and get rid of the abstract, futuristic fears. That doesn’t mean the fears will disappear, and it also doesn’t mean that AI can’t be used for bad purposes. However, it’s a technology, and as such it’s a tool. It’s not conscious, it’s not evil, and it has no opinions or intentions.

There is another important element in terms of understanding AI: the black box issue. In many cases, it’s enough for us to give the model some data, ask for some results, and get them. Voilà. We don’t need to follow the decision-making process inside the model; it’s enough to know that the decision is based on the data, and we can later verify how accurate the model’s results are. However, there are cases when we can’t just trust the model.

Imagine you go to see a doctor. The doctor takes down your symptoms, examines you, and runs your medical results through an AI system. It’s just a cold: that’s what the doctor said, and the AI confirmed it. So far, so good. But the doctor also uses AI to suggest treatment methods, and the AI wants you hospitalized and put on antibiotics. Huh. You don’t feel that bad, it’s just a cold, and you really don’t want to go to the hospital and hang out with people who are much more seriously ill. No need to catch another disease. And antibiotics, really? It’s just a sore throat and a runny nose!

Artificial intelligence solutions have proven to be an effective diagnostic aid, but unfortunately, they don’t handle everything equally well. In many cases, AI’s decisions on how to treat a patient will be correct, but when one is wrong, it can harm the patient’s well-being. Think about bad treatment decisions for cancer, epilepsy, or other serious diseases. Or judicial decisions: who goes to jail, who can be released on bail, how severe the sentence will be. Or even banking: will you get a loan? Whatever the decision, you should be able to know WHY. When there’s no explanation as to why a certain decision was made, and it doesn’t align with what we think is right, distrust builds up. However, there are approaches that aim to increase model transparency, such as LIME (local interpretable model-agnostic explanations), which I described in the article 12 challenges of AI adoption.
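To make the idea behind LIME a bit more tangible, here is a minimal sketch in plain Python. It is not the `lime` library and not a full implementation of the method: it only shows the core trick of sampling points around one case, asking the black box for its answers, and fitting a simple weighted linear model to them. The loan-scoring function, its coefficients, and the feature names (income and debt, in arbitrary units) are invented purely for illustration.

```python
import math
import random


def black_box_loan_score(income, debt):
    """Hypothetical opaque model (made up for this sketch): approval score in [0, 1]."""
    return 1 / (1 + math.exp(-(0.08 * income - 0.15 * debt - 1.0)))


def solve_linear_system(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def explain_locally(model, instance, scale=1.0, n_samples=2000, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance.

    Returns one coefficient per feature: roughly how much the model's
    output changes per unit of that feature, near this instance only.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offsets = [rng.gauss(0, scale) for _ in instance]
        point = [value + off for value, off in zip(instance, offsets)]
        squared_distance = sum(off * off for off in offsets)
        rows.append([1.0] + offsets)  # intercept term + centered features
        targets.append(model(*point))
        # Closer samples get more say in the local explanation.
        weights.append(math.exp(-squared_distance / (2 * scale ** 2)))
    k = len(instance) + 1
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights))
            for i in range(k)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[1:]  # drop the intercept


income_effect, debt_effect = explain_locally(black_box_loan_score, [30.0, 10.0])
print(f"income: {income_effect:+.3f}, debt: {debt_effect:+.3f}")
```

For this made-up applicant, the surrogate gives income a positive coefficient and debt a larger negative one. That is the kind of answer to "WHY" a person could actually read, even though it only describes the model's behavior close to this one case.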

Data is just stats, not reality

Because we are surrounded by lots and lots of data and stats, and we see how people manipulate numbers and facts to make them match their controversial, or plain wrong, views, our trust in numbers has gone down. Many people don’t receive a thorough education on how statistics work; they don’t understand probabilities, patterns, or even poll results. We either think the numbers speak the truth or we think they’re garbage. And the truth lies somewhere in between. It’s hard to work out the probability of something happening, or to predict future outcomes, when all we have is a couple of instances – data points. However, with big data come big possibilities. Analyzing large volumes of data helps us understand past patterns and estimate what is likely to happen next. This way, we can predict customer churn, product demand, machinery maintenance needs, and so on. But it’s about a group, not an individual. When we learn to understand numbers and facts better, we can also gain insights into what AI does with data. It’s not as simple as calculating the average height of people within a group, but it’s still analyzing data and deriving conclusions from it. Data reflects reality to a large extent; it’s not just made-up information.
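A quick back-of-the-envelope sketch shows why a couple of data points tell us so little while a large dataset tells us a lot. It uses the standard normal-approximation margin of error for a proportion, which is itself only rough for very small samples. The 20% churn rate and the sample sizes are assumed numbers chosen for illustration, not results from any real dataset.

```python
import math


def margin_of_error(observed_rate, sample_size, z=1.96):
    """Approximate 95% margin of error for an observed proportion."""
    return z * math.sqrt(observed_rate * (1 - observed_rate) / sample_size)


# Hypothetical example: 20% of the customers we looked at churned.
for sample_size in (10, 1_000, 100_000):
    moe = margin_of_error(0.20, sample_size)
    print(f"n={sample_size:>7}: 20% plus or minus {moe * 100:.1f} points")
```

With ten customers, the uncertainty is larger than the estimate itself. With a hundred thousand, it shrinks to a fraction of a percentage point. Even then, the number describes the group: it says nothing certain about what one particular customer will do.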

Algorithmic bias

Bias is a serious issue, but it’s not solely algorithmic. And with bias, the problem is more complex: trust AI too much and you’re wrong, question AI too much and you’re also wrong. Bias creeps into many relevant aspects of human lives. Amazon’s AI recruiter was biased against women and would favor men. COMPAS, an algorithm used in the US to guide sentencing by predicting the likelihood of reoffending, was racially biased: it predicted that black defendants posed a higher risk of recidivism than they did in reality, and it did the opposite for white defendants. Facial recognition software is used to some extent to recognize suspects, yet it struggles to accurately recognize faces other than those of white men, which increases the risk of misidentifying people of color and women. And even simple everyday examples reflect bias: Google “CEO”, go to images, and you’ll see a vast majority of men. Google “assistant”, and you’ll see, obviously, a vast majority of women.

However, algorithmic bias doesn’t come from nowhere. Bias is deeply ingrained in our minds and reflected in data. If a company has for years repeatedly chosen men over women in its recruitment processes, that’s what AI will learn from the data: men are better. If we punish black people more severely and arrest them more often, AI will conclude that they’re more likely to be criminals. And if you don’t belong to a minority yourself, you may not even consider whether your training dataset is representative of the entire population.
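One simple way to look for this kind of skew is to compare error rates between groups. The sketch below computes the false positive rate per group: the share of people who did not reoffend but were still flagged as high risk. The records are toy data invented for this example and do not come from COMPAS or any real system; the group labels and field layout are assumptions as well.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_high_risk, reoffended) tuples."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:  # only people who did NOT reoffend can be false positives
            negatives[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


# Toy data, made up for illustration only.
toy_records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True),
]

for group, rate in false_positive_rate_by_group(toy_records).items():
    print(f"group {group}: {rate:.0%} of non-reoffenders were flagged as high risk")
```

A large gap between groups doesn’t explain where the bias comes from, but it is a clear signal that the model and its training data deserve a closer look before anyone relies on the predictions.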

Technology can be too good – or too bad

Over the years, we’ve learned to trust the GPS. The system is rather infallible, right? We just follow its directions, especially when we’re in a place we don’t know well. That was also the case for Robert Jones, who had a GPS mishap in 2009. He was driving where the GPS directed him, and even though he could see the surroundings were changing and did not match what the GPS was showing – a road which in real life turned out to be a steep and narrow path – he did what the GPS suggested. Suddenly, he hit a guardrail and stopped, only to find his car hanging off the edge of a cliff. Jones reportedly said: “I just trusted the satnav. It kept insisting that the path was a road even as it was getting narrower and steeper”. Of course, it was 2009, and technology (not just GPS, technology in general) has improved since then. It seems like we either distrust technology and try to stay away from it, or get so accustomed to it that we stop questioning its decisions. Whether AI is very good or very bad, we find both scenarios disturbing. When a system seems to make no mistakes, it just doesn’t seem right. And if it makes quite a lot of mistakes, we’re quick to give up on it.

Bridging the trust gap

To solve this problem, we have to approach it in two ways: build AI systems we can trust, and learn when and how to trust AI. To build trustworthy, reliable AI, you need to make sure it’s fair – or “responsible”. However, many of the issues AI deals with give rise to ethical questions. Unfortunately, ethics cannot be outlined as simple step-by-step rules. AI is not aware of what’s right and what’s wrong, so it can’t stop itself from making unfair decisions. There are tools developers can use to hold their models to high standards, and there are organizations that focus on addressing the harms of AI, like the Algorithmic Justice League. And there are also steps companies can take to help bridge the trust gap:

- Educate the staff

- Develop guidelines and standards

- Increase model transparency

- Ensure quality assurance standards

These steps may seem simple, but they will take time and effort to implement. In the end, however, it will all pay off, since we’ll make our AI better, and our coworkers more knowledgeable and confident.