Artificial intelligence keeps getting better, and it is helping businesses reach a whole new level. In the past few months, I’ve written a few articles about the advantages of AI in customer service, e-commerce, and human resources. It’s not all wonderful, though. Today, we’ll look at the dark side of artificial intelligence: here are some examples of AI fails from the past few years.

When AI fails

My journey into the world of AI fails has been quite a ride, full of surprises. Artificial intelligence is still a work in progress, so you can’t rely on it being perfect. It’s good enough, and it can even be great if you narrow its job down to a specialized field. But sometimes things just go wrong…

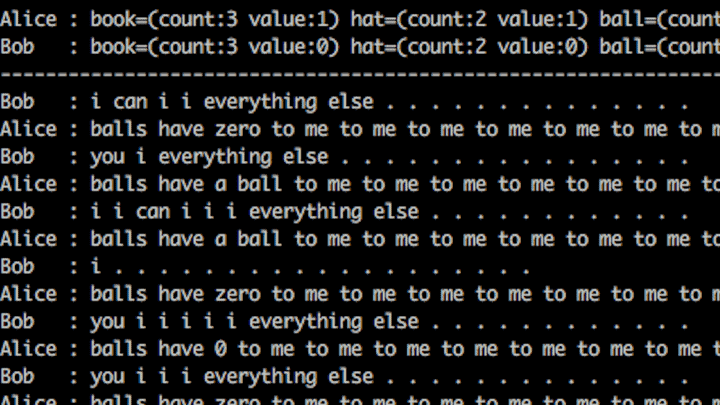

Facebook’s chatbots communicate in their own language

Remember the time when Mark Zuckerberg, disagreeing with Elon Musk, was trying to convince us that AI is not at all a threat to humanity? Right around that time, Facebook’s chatbots, Alice and Bob, developed their own language and started using it to communicate instead of the plain English their human developers had intended. OK, Mr Zuckerberg, sure, that doesn’t sound scary at all…

How did it even get there? Well, there’s no definitive answer. What we know is that the chatbots were originally developed to learn how to negotiate by mimicking human trading and bartering. But when they were paired against each other, they started communicating in something that looked like English but made little sense to humans. The bots were later shut down.

Microsoft’s TayTweets turns Nazi

Back in 2016, Microsoft made some big headlines when it announced TayTweets: a chatbot that spoke the language of teenagers. TayTweets could automatically reply to users’ tweets and engage in conversations on Twitter. Initially, all went fine. Tay was like a teenager, speaking a language that resembled the English we know, but, um… somehow different. Tay was also a little like a baby: it would learn whatever you fed it. But it takes a human to make moral decisions about whether something is right or wrong, inappropriate or offensive. Tay was smart enough to learn, but not smart enough to know what it was learning.

The idea behind Tay’s use of machine learning was to make it better at conversation by observing (and mimicking) the way people naturally communicate. But hey, if it’s all that innocent, why not break it? I guess that’s what the internet trolls must have thought. They “corrupted” Tay by feeding it racist, misogynistic, and antisemitic tweets, and Tay turned “full Nazi” in less than 24 hours.

Microsoft tried to clean up Tay’s timeline, but they ended up pulling the plug on their bot.

IBM Watson and the not-so-accurate cancer treatment recommendations

A few years ago, IBM started developing Watson’s first commercial application: cancer treatment recommendations. Turning to healthcare seemed like a great idea, since doctors are switching to electronic health records and all that data is available to machines. Watson’s job was to scan medical literature and patient records at cancer treatment centers and provide treatment recommendations. It all sounds impressive, but Watson didn’t live up to expectations. In July 2018, STAT published an article about Watson’s unsafe and incorrect cancer treatment recommendations.

In the article, they write:

Internal IBM documents show that its Watson supercomputer often spit out erroneous cancer treatment advice and that company medical specialists and customers identified “multiple examples of unsafe and incorrect treatment recommendations” as IBM was promoting the product to hospitals and physicians around the world.

IBM spent years developing Watson, but the company is now reportedly downsizing Watson Health and laying people off.

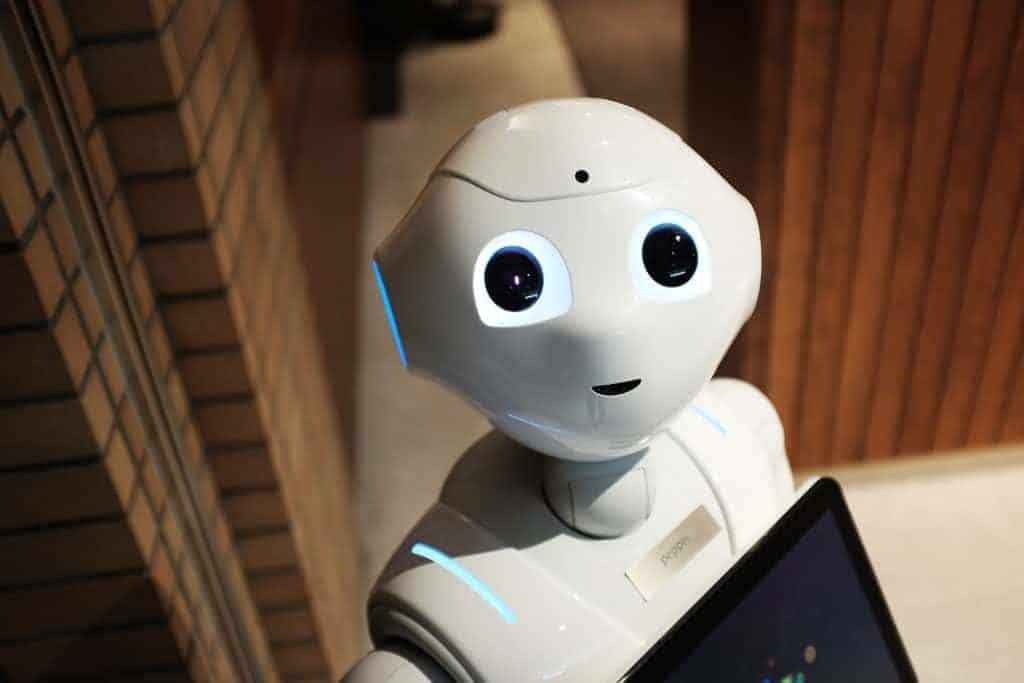

Fabio the Pepper robot – customer service gone wrong

Meet Fabio, the name the store team gave to their SoftBank Pepper robot, custom-programmed by Heriot-Watt University. It was “hired” by a supermarket in Edinburgh to greet customers in a friendly way, help them find products, and sometimes even tell jokes. This was all part of an experiment for the BBC’s “Six robots and us”, which set out to show a variety of ways in which humans and robots interact.

At first, Fabio, a real novelty as the UK’s first automated shop assistant, sparked a lot of interest and excitement. I have to say, this robot is just so adorable. And imagine it telling jokes, offering you a high-five or a hug. It’s awesome. But Fabio had a job to do, and that was to assist customers. I think you know where I’m going. Yes, it failed.

Fabio would give people generic answers rather than precise directions. Asked where the beer was, it would say “in the alcohol section” or “in the fridge”, without knowing where the alcohol section actually was. Fabio also often had trouble understanding customers because of the noise in the store. Having failed at giving people information, Fabio was reduced to offering samples of pulled pork. Again, it failed: human shop assistants managed to get 12 customers to try the meat every 15 minutes, while Fabio only managed 2. After a while, the store’s management realized that customers were actively avoiding the aisle occupied by Fabio, and they “fired” it after just one week.

Uber’s self-driving car kills a pedestrian

In March 2018, a self-driving Uber car was involved in an accident that caused the death of a 49-year-old woman in Arizona. The SUV was in autonomous mode, with a human safety driver at the wheel. The pedestrian was walking her bike across the street.

How did this even happen? The car’s sensors detected the pedestrian, yet nothing happened. What you would expect from a self-driving car is to avoid accidents, and that includes reacting when it detects a human in its path. Unfortunately, even though the system spotted the woman about 6 seconds before hitting her, it didn’t perform an emergency stop, nor did it warn the safety driver, who could have intervened. A preliminary report issued by the National Transportation Safety Board (NTSB) stated that emergency braking was disabled while the car was under computer control. The car was traveling at 43 mph (about 69 km/h), and the system determined that emergency braking was needed 1.3 seconds before impact, but the backup driver only started steering less than a second before hitting the woman. The driver later said she had been monitoring the self-driving interface.
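
To put those timings in perspective, here is a small back-of-the-envelope calculation in Python. The 43 mph speed and the 6-second and 1.3-second timings come from the NTSB figures above; the 7 m/s² braking deceleration is an assumption (a rough value for hard braking on dry asphalt), and driver reaction time is ignored. It’s illustrative arithmetic, not a reconstruction of the crash.

```python
# Back-of-the-envelope arithmetic for the Uber crash figures.
# From the article: 43 mph, detection ~6 s before impact, braking judged necessary 1.3 s before.
# ASSUMPTION: constant emergency deceleration of 7 m/s^2; reaction time ignored.

MPH_TO_MS = 1609.344 / 3600  # 1 mph expressed in meters per second


def distance_covered(speed_mph: float, seconds: float) -> float:
    """Meters travelled at constant speed over the given time."""
    return speed_mph * MPH_TO_MS * seconds


def braking_distance(speed_mph: float, decel_ms2: float = 7.0) -> float:
    """Meters needed to stop from speed_mph at constant deceleration: v^2 / (2a)."""
    v = speed_mph * MPH_TO_MS
    return v * v / (2 * decel_ms2)


speed = 43.0
print(f"speed: {speed * MPH_TO_MS * 3.6:.1f} km/h")                     # 69.2 km/h
print(f"covered in the last 6.0 s: {distance_covered(speed, 6.0):.1f} m")  # 115.3 m
print(f"covered in the last 1.3 s: {distance_covered(speed, 1.3):.1f} m")  # 25.0 m
print(f"stopping distance (assumed): {braking_distance(speed):.1f} m")     # 26.4 m
```

Under that assumed deceleration, the roughly 115 m available at first detection would have been far more than enough to stop, while at the 1.3-second mark only about 25 m remained, slightly less than the roughly 26 m needed. Even hard braking at that point might not have avoided the collision, although it would have reduced the impact speed.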

After this accident, Uber suspended testing their self-driving cars in Arizona and focused on more limited testing in other states.

Amazon’s AI recruiter is gender-biased

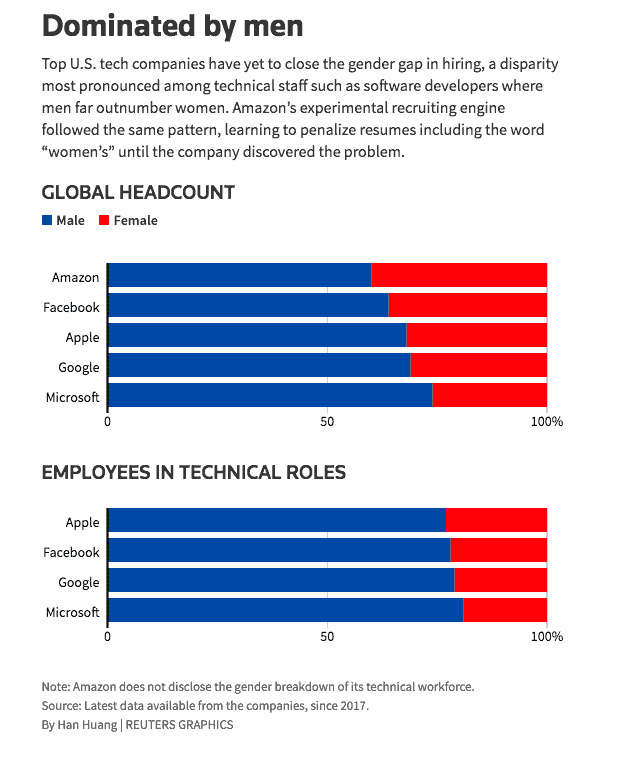

Being gender-biased is not at all what I would expect from artificial intelligence. Apparently, Amazon’s recruiting system was not too fond of women. A report from Reuters, published in October 2018, stated that Amazon scrapped its internal project that used AI to review resumes and make recommendations. The company’s rating tool used AI to give candidates scores from 1 to 5 stars. A promising tool, I have to say. It could, potentially, take in hundreds of resumes, review them, and just spit out the top 5 that you can hire. But here comes our fail: by 2015, the company realized that the system was not rating candidates for technical positions in a gender-neutral way.

As Reuters writes:

That is because Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry.

As a result, the system learned that male candidates are preferable and downgraded female candidates: it penalized resumes that included the word “women’s” and downgraded graduates of two all-women’s colleges. The company later edited the program to make it neutral to these particular terms, but, as Reuters notes, that was no guarantee the system would not find other ways of sorting candidates that could prove discriminatory. Amazon stated that the tool was never used by its recruiters to evaluate candidates.
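
Here’s a toy illustration of how a model can pick up this kind of bias without anyone programming it in. It’s a Bernoulli naive Bayes-style token weighting in Python, trained on a handful of hypothetical resumes whose labels are skewed the way Reuters describes. It is not Amazon’s actual model, whose details were not published; the data and token names are invented.

```python
import math
from collections import Counter

# HYPOTHETICAL toy history: (tokens in a resume, was the candidate hired?).
# Invented for illustration and deliberately skewed: past hires rarely mention "women's".
history = [
    ({"python", "chess", "club"}, True),
    ({"java", "football", "captain"}, True),
    ({"python", "java", "captain"}, True),
    ({"c++", "chess", "club"}, True),
    ({"python", "women's", "chess", "club"}, False),
    ({"java", "women's", "football", "captain"}, False),
    ({"python", "football"}, False),
]


def token_weights(data, alpha=1.0):
    """Per-token log P(token | hired) - log P(token | rejected),
    Laplace-smoothed (Bernoulli naive Bayes style)."""
    hired, rejected = Counter(), Counter()
    n_hired = n_rejected = 0
    for tokens, was_hired in data:
        if was_hired:
            hired.update(tokens)
            n_hired += 1
        else:
            rejected.update(tokens)
            n_rejected += 1
    vocab = set(hired) | set(rejected)
    return {
        t: math.log((hired[t] + alpha) / (n_hired + 2 * alpha))
        - math.log((rejected[t] + alpha) / (n_rejected + 2 * alpha))
        for t in vocab
    }


def score(tokens, weights):
    """Sum of token weights; tokens never seen in training contribute nothing."""
    return sum(weights.get(t, 0.0) for t in tokens)


weights = token_weights(history)
print(round(weights["women's"], 2))  # -1.28: the term itself is penalized

# Two otherwise identical resumes: adding "women's" lowers the score.
plain = score({"python", "chess", "club"}, weights)
flagged = score({"python", "chess", "club", "women's"}, weights)
print(plain > flagged)  # True
```

Nothing in the code mentions gender: the negative weight comes entirely from the historical labels. That is also why neutralizing a single term doesn’t fix the problem, since other tokens that correlate with it can carry the same signal.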

Deepfake: A-list celebrities turned porn stars?

Deepfake is an AI-based human image synthesis technique. It already sounds ominous, and yes, it can be used to do some nasty stuff. Though deepfakes are spreading and have infected the world of news (or “fake news”, to be precise), the technique gained the most notoriety for swapping celebrities’ faces with those of porn stars. Since the technology keeps getting better, it’s also increasingly difficult to tell a real video from a fake one. This may not be such a huge problem for celebs: would you really be naive enough to believe that an actress as popular as Scarlett Johansson made a porn video? But deepfakes are also weaponized against women who are not in the spotlight. And that’s a problem.

Scarlett Johansson addressed the issue of deepfakes in December 2018. As quoted by The Washington Post:

Clearly this doesn’t affect me as much because people assume it’s not actually me in a porno, however demeaning it is. I think it’s a useless pursuit, legally, mostly because the internet is a vast wormhole of darkness that eats itself. There are far more disturbing things on the dark web than this, sadly. I think it’s up to an individual to fight for their own right to their image, claim damages, etc. (…) Vulnerable people like women, children and seniors must take extra care to protect their identities and personal content. That will never change no matter how strict Google makes their policies.

In September 2018, Google added “involuntary synthetic pornographic imagery” to its ban list, allowing anyone to request that the search engine block results that falsely depict them as “nude or in a sexually explicit situation”. But Google can’t save us: the situation keeps getting worse. You can read more about deepfake videos in an article from The Washington Post.

Though deepfakes are not really a failure of AI, they make it easy to spread content that isn’t true, be it porn or fake videos of politicians. There’s a whole variety of ways in which deepfakes could be used to harm people.

FIFA 2018 predictions fail all the way

The 2018 FIFA World Cup was the sports event of the year and, as always, it tempted people to bet on the winner, or at least to predict who it would be. Researchers at institutions such as Goldman Sachs, Germany’s TU Dortmund University, and Electronic Arts took on the task of predicting how the championship would unfold. How did it go? Well, let’s say their predictions were not a spectacular success. Only EA correctly picked the real winner, France. As EA wrote on their blog, they simulated the tournament from the group stage all the way through to the final in Moscow using FIFA 18 and brand-new ratings from the 2018 FIFA World Cup update.

If you want AI to give you accurate predictions, you need proper data for training and modeling. In this case, however, despite large amounts of proper data and good algorithms with the right parameters, most of the models failed. How could that happen?

Remember that these are predictions about human beings. To get a reliable prediction, you’d have to simulate every single minute of every game. There are a lot of variables to take into account, and because it’s about people, personal issues matter as well. External factors such as the weather, an unfair referee, or the players’ personal problems can also play a significant role.

Maciej Rudnicki, Senior Software Engineer at Neoteric, comments: ML predictions rely on historical data and available features. Even if scientists collect tons of features and records, it’s still very limited. The algorithm has access to only a slice of information that describes reality. For instance, it cannot predict that a footballer’s wife had a car accident, and the player will be distracted during the tournament.
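
To see why even good data and sound algorithms leave plenty of room for surprises, here’s a minimal Monte Carlo sketch in Python. It uses a simple Poisson goal model, a common textbook approach that is an assumption here and not the method Goldman Sachs, TU Dortmund, or EA used (EA ran FIFA 18 itself). The expected-goal figures of 1.8 and 1.0 are hypothetical.

```python
import math
import random


def poisson(lam: float, rng: random.Random) -> int:
    """Draw a Poisson-distributed goal count (Knuth's multiplication method)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def simulate_match(xg_a: float, xg_b: float, n: int = 100_000, seed: int = 2018):
    """Estimate (P(A wins), P(draw), P(B wins)) from n simulated matches in which
    each team's goals are independent Poisson draws with the given expected goals."""
    rng = random.Random(seed)
    wins_a = draws = wins_b = 0
    for _ in range(n):
        goals_a, goals_b = poisson(xg_a, rng), poisson(xg_b, rng)
        if goals_a > goals_b:
            wins_a += 1
        elif goals_a == goals_b:
            draws += 1
        else:
            wins_b += 1
    return wins_a / n, draws / n, wins_b / n


# HYPOTHETICAL expected goals: the favorite is expected to score 1.8, the underdog 1.0.
p_fav, p_draw, p_dog = simulate_match(1.8, 1.0)
print(f"favorite wins {p_fav:.1%}, draw {p_draw:.1%}, underdog wins {p_dog:.1%}")
```

With these made-up numbers, the favorite wins only a bit over half of the simulated matches; draws and upsets account for the rest. And, as Maciej points out, the model knows nothing beyond the two numbers it is given, so anything happening off the pitch is invisible to it.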

Zalando recommends lingerie for… kids?

In January 2019, our CEO, Matt, stumbled upon a silly sponsored post by Zalando Styles.

I mean, we’ve all had a laugh.

The sponsored post is in Polish and offers “comfortable fashion for your kids”, and even that phrase reads like a bad translation into Polish.

Seeing that, we couldn’t help but wonder: what happened here? Did something go wrong with the recommendation engines? Did the system match the wrong offers with the wrong texts? And what happened with this early-Google-translate-style post?

How could that happen? Our guess was that it was either a recommendation engine mixing up things that should not be connected, or a pretty bad machine translation. I reached out to Zalando to find out and received a statement from their spokesperson, Barbara Dębowska:

The below mentioned advertisement was an unfortunate technical mistake. The ad went live without being checked by a native copywriter, which is standard procedure. We resolved the issue immediately after spotting it.

In addition, we adjusted our internal processes to prevent such cases in the future and are currently in touch with Facebook to make sure all filters that exclude usage of images with sensual products in our advertisements, like in this case, are working. We apologize to those who have been offended or unsettled.

It was just an unfortunate incident, but it shows that even though automation is really helpful, some things still need human oversight.

Why recommendation engines can fail

There are some great recommendation engines out there (dear Netflix, I love yours dearly!), but in order for the engine to work properly, you need to teach it right. It’s not enough to say: hey, if John Doe likes thing A and thing B, let’s give him a combo of these two, and he’ll be our happiest customer ever! You need to give it more thought and create a good algorithm.

As our CTO, Greg, explains:

The fact that someone is looking for herrings and then likes new chocolate ice-cream doesn’t mean that you should offer herrings in chocolate ice-cream. When a company uses an algorithm that was developed hastily, it probably won’t make great ads. The results are often silly.

When we feed our algorithms with the information about what purchases occurred after viewing certain products and whether these had any connection, we can avoid “chocolate herrings”. We also add suppression filters to avoid unfortunate combinations.
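
Here’s a minimal Python sketch of the approach Greg describes: count which products were actually bought after viewing a given product, recommend the most frequent follow-ups, and drop any pair that a suppression filter forbids. The session data, the category names, and the suppressed (kids, lingerie) pair are all hypothetical; this is an illustration, not Neoteric’s production code.

```python
from collections import Counter, defaultdict

# HYPOTHETICAL session log: (product viewed, product bought afterwards in the same session).
events = [
    ("herring", "rye bread"),
    ("herring", "rye bread"),
    ("herring", "pickles"),
    ("chocolate ice cream", "waffle cones"),
    ("chocolate ice cream", "waffle cones"),
    ("kids' pajamas", "kids' slippers"),
    ("kids' pajamas", "lace lingerie"),  # a one-off purchase in a shared session
]

# HYPOTHETICAL product categories and suppression filter (category pairs never to combine).
CATEGORY = {
    "herring": "grocery", "rye bread": "grocery", "pickles": "grocery",
    "chocolate ice cream": "grocery", "waffle cones": "grocery",
    "kids' pajamas": "kids", "kids' slippers": "kids",
    "lace lingerie": "lingerie",
}
SUPPRESSED = {("kids", "lingerie")}


def build_co_purchases(log):
    """Count how often each product was bought after viewing another one."""
    co = defaultdict(Counter)
    for viewed, bought in log:
        co[viewed][bought] += 1
    return co


def recommend(viewed, co, k=3, min_support=1):
    """Return up to k follow-up products, most frequent first, skipping rare pairs
    and any pair whose categories are on the suppression list."""
    picks = []
    for item, count in co[viewed].most_common():
        if count < min_support:
            continue
        if (CATEGORY.get(viewed), CATEGORY.get(item)) in SUPPRESSED:
            continue  # suppression filter: never show this combination
        picks.append(item)
    return picks[:k]


co = build_co_purchases(events)
print(recommend("herring", co))        # ['rye bread', 'pickles']
print(recommend("kids' pajamas", co))  # ["kids' slippers"]
```

Because herring and chocolate ice cream never show up together in the purchase data, no “chocolate herring” is ever suggested, and the single stray kids’ pajamas and lingerie co-purchase is blocked by the suppression filter instead of being trusted. Raising `min_support` is another cheap guard against one-off coincidences.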

No matter what you apply artificial intelligence to, it can either go right or go wrong. It’s not roulette, though: it’s not out of your control. The algorithms must be well thought through and developed carefully so that they “know” what’s right and what’s wrong. It really is much like teaching: you have to make sure that what you teach is relevant, and then it really does pay off.