This article is based on a presentation delivered during Neoteric AI Breakfast London. Read more about the event in the ebook “Scaling Digital Products with Data”.

Learn how to use predictive analytics to drive business results and revenue

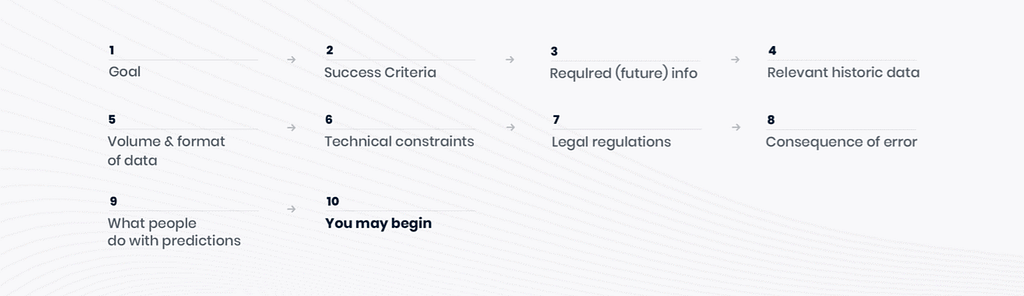

When you’re a beginner with AI, you might think: “So there’s this cool thing called AI, or machine learning, and I may find it useful”. But when you start, it’s better not to do it with machine learning right away. You don’t yet know whether you actually need it. Instead, you should start with identifying the goal. So why do you want this AI? Doing AI just for the sake of it is basically burning money on big boys’ toys, and not doing business. That’s not what we want.

Once you know what you want to do like predict churn, or prevent frauds, you need to specify your success criteria. You need to know what exactly it means that you did a great job, when the models and analytics work terribly, and when you’re somewhere on the middle ground. I’d say that in building models this is far more important than in any IT project. You need to be aware of how much you can improve the model, but also be able to tell when to stop. Perfection doesn’t exist.

So now you know what you want, and what “good” and “bad” in terms of results mean to you. Then, you need to identify what data and what decisions you need, e.g.: I’d like to know who wants to leave my company. Think about the future information that you might need to make good decisions about your current job, and then, what’s the historical data, preferably data that you already have, that can help you predict what will happen. Finally, there’s all of the analysis of what kind of data there is, where it’s available, whether it’s structured, not structured, semi-structured, or a complete mess. When you know what you need to do, what’s available and what’s not, there are technical constraints. With cloud, we say there’s unlimited computing power, but that’s not always the case. When you’re trying to build a model that works extremely fast, you need it at the edge. When you work at the edge, you have much more limited computing power, much more limited memory. You have to be mindful of the battery usage or you have no connectivity and you need the model working both at the edge and in the cloud.

Now, once we know what we can do, we have to think about what we should do. Just the fact that we have a bunch of data about our customers doesn’t mean that we should process that data. Let’s take the example of hospitals. You probably shouldn’t market to people based on their illnesses, that’s just not OK. With a media or an internet company, knowing what the users watch and what they view can also be a problem. Even when you tell them that you want to have insight into everything they do online to give them better ads – asking for something like that poses the risk of damaging the company’s reputation.

Finally, we need to know what happens when you make bad predictions. It’s probably not a bad situation when we’re looking at churners thinking “If I give this guy a discount, he’ll stay with us”, and if he still ends up leaving, that’s not a critical issue. But if it’s about driving a car, and the prediction goes “No, it’s not a tree” – and it is – the results are much more serious.

The last thing to do is to determine how these predictions will actually be used. Let’s take a look at an automatic light switch: we’re building an intelligent house system, we want the lights to go on and off automatically, and that’s the point where we have to decide whether the lights should actually go off automatically or maybe ping the person that they forgot to turn off the lights.

Starting an AI project

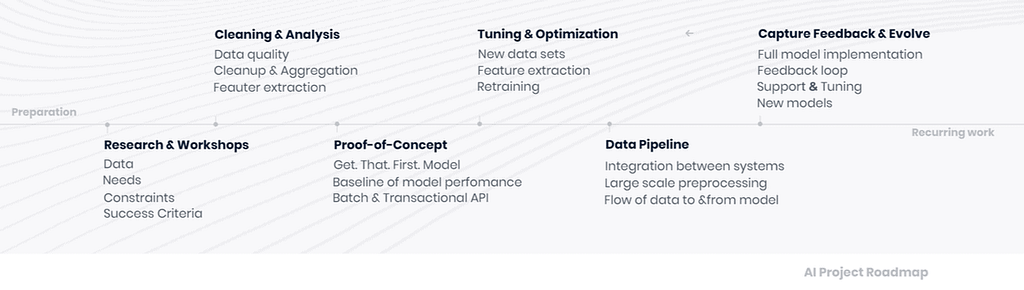

When you’re starting an AI project in your company, you need to do some preparations. You can start, for example, with a kind of a workshop. You will determine all of those details: the goal, success criteria, constraints, and so on, then you’ll have a look inside your data and you see that is not all that awesome – you need to make a good analysis of data to know where the mistakes are, what parts you’re missing, where you need to fill in the gaps.

The next step is to get the very first model – e.g. let’s predict that someone’s going to buy item X. Whatever you get on top of random guessing is you getting better and the model is already bringing you some cash. That’s where the success criteria come in. You can keep building the model for 2 years hoping that it would get better, or you can spend 2 weeks to get just a little bit better than random and then start improving based on the feedback on what’s actually happening – and I highly recommend starting earlier, not later. We’ve all heard stories of companies that were working in their basement on excellent products, but the market has already moved on. If you spend that much time on a model, and data changes much faster, you get nowhere. So first, you get to the proof-of-concept, then you put the model in the hands of people, and then you check how it works, add new data. When it’s working – scale the hell out of it. You can process not 10K profiles a day but like during the pilot, but a few hundred million – and it’s more powerful, and more fun. When you capture feedback – which is very important – you can improve the model. The predictions are not there just for the art of it, you need people to tell you whether these predictions are good or bad. Without the right feedback, there’s just no learning.

Let’s look at how it works in practice. One of our clients had a big problem: a whole bunch of their customers were leaving and there was nothing they could do. It’ a telecom company that has about 1 million customers paying them significant amounts of money for TV and internet subscriptions. So they needed to stop customers from churning but how? The process went like this: the goal was to reduce churn – that’s what you get to during the workshops. We were going for a 10% churn reduction without cutting down on revenue from customers, It would be pretty easy to reduce churn when you’re just giving stuff away to your customers. We determined that our criteria were to get to an 8% (or bigger) reduction – which is a success in terms of the initial project, we investigate anything between 5 and 8%, and under 5% – it’s a failure. The most important question in this process was: Which customers are leaving? Then you can learn why they’re leaving, what they do and don’t like, how much they’re willing to pay. The data we look when investigating that is the demographic information, purchase history, interactions with the customer service and sales. With such a set of data, you are able to build a decent profile of the people who use your services. Then you identify where all the data points are – there’s a system for sales, and a system for customer service, there’s the website – and there’s usually no easy way to connect all of that. Having all of that, we determined how the churn model would work and what the metrics would be. In case of churners, it’s necessary to catch all of them and to precisely point who the churner is – so we would get a list of these and send it over to the call center staff. The cool thing about looking inside the model and inside the decision trees was that they could see why the churners were about to leave, so they could prevent it but also change the way they did business, because they way they interacted with customers changed to more personalized.

It all sounds so pretty and simple. However, there are a few catches in here. First, there’s the accuracy. It’s easy to build a model that’s crazy accurate by always telling that no one will ever churn. If you’ve got 1% of churners every month, the accuracy is 99%. But this model is useless. Then, there’s something more tricky: bias in data. The way you train models is usually tied to the data you have. If you’re missing pieces of information e.g. due to the way you collect data, you can end up with a terrible model. Garbage in, garbage out.

Now that we know who’s about to churn, how do we stop them? Do we give them significant discounts? The talks with such a customer become difficult – especially in the face of losing a sales bonus. That’s where recommendations step in giving the staff a tool to negotiate with the “about to leave” customer. Recommendations were based on the information about how the customers like your products and the similarities between user preferences. So you’ve got a guy who likes products A, B, C, D, and E, and you’ve got a guy who previously liked all of them but E – there’s a high probability that he will also like product E. There’s a difficulty here, though, when you’re dealing with new customers that you have absolutely no information about. And how to get accurate ranking of products or services without explicitly asking customers whether they like something? It’s like with Spotify, which is not asking the users what they like. Instead, the app knows what you like by tracking how many songs of a genre you listened to, how long you’re listening to each song, whether you turn the volume up – that gives them information on what the rating should be without ever asking for actual rating. And with a recommender system, you also track results to check its performance: you compare the predictions with the actual number of items sold. The trend lines of both should be as similar as possible.

When we connected all of that, we were able to tell which customers wanted to leave and equip the staff with the right tools to prevent them from doing so, the marketing team could use the data to guide decisions and not make up other random product bundles, the consultants know who to approach and what to approach them with, and everyone got their bonuses with a higher efficiency of work and increased customer satisfaction. The end result: churn reduced by 24%.