The release of ChatGPT has generated enormous hype around the technology behind it: Generative Pre-trained Transformer 3, commonly known as GPT-3, and its later versions, GPT-3.5 and GPT-4. No wonder! Because it performs NLP tasks with high accuracy, it can automate many language-related tasks, such as text classification, question answering, machine translation, and text summarization; it can be used for generating content, analyzing customer data, or developing advanced conversational AI systems.

If you’re reading this article, you’ve probably had a chance to play around with ChatGPT already or seen it in action on YouTube, blogs, and social media posts, and now you’re thinking about taking things to the next level and harnessing the power of GPT for your own projects.

Before you dive into all the exciting possibilities and plan your product’s roadmap, let’s address one important question:

How much does it cost to use GPT-3 in a commercial project? OpenAI API pricing overview

OpenAI promises simple and flexible pricing.

We can choose from four language models: Ada, Babbage, Curie, and Davinci. Davinci is the most powerful one (used in ChatGPT), but the other three can still be used successfully for easier tasks, such as writing summaries or performing sentiment analysis. Prices are quoted per 1K tokens. Using the Davinci model, you would pay $1 for every 50K tokens, that is, $0.02 per 1K tokens. Is it a lot? As explained on the OpenAI pricing page:

You can think of tokens as pieces of words used for natural language processing. For English text, 1 token is approximately 4 characters or 0.75 words. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words). As a point of reference, the collected works of Shakespeare are about 900,000 words or 1.2M tokens.

So for only $100, you are able to perform operations on ~3,750,000 English words, which is ~7500 single-spaced pages of text. However, as we can read further,
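The arithmetic behind this estimate can be sketched in a few lines. This is only an illustration: the 0.75 words-per-token ratio and the Davinci price come from the text above, while the figure of roughly 500 words per single-spaced page is our assumption.

```python
# Rule of thumb quoted above: 1 token is roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75
# Davinci price from the article: $1 per 50K tokens, i.e. $0.02 per 1K tokens.
DAVINCI_USD_PER_1K = 0.02
# Assumption (not from OpenAI): about 500 words per single-spaced page.
WORDS_PER_PAGE = 500


def tokens_for_budget(budget_usd: float, usd_per_1k: float) -> float:
    """Number of tokens a budget buys at a given price per 1K tokens."""
    return budget_usd / usd_per_1k * 1000


tokens = tokens_for_budget(100, DAVINCI_USD_PER_1K)
words = tokens * WORDS_PER_TOKEN
pages = words / WORDS_PER_PAGE
print(f"{tokens:,.0f} tokens ~ {words:,.0f} words ~ {pages:,.0f} pages")
```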

Answer requests are billed based on the number of tokens in the inputs you provide and the answer that the model generates. Internally, this endpoint makes calls to the Search and Completions APIs, so its costs are a function of the costs of those endpoints.

So our 7500 pages of text include input, output, and the prompt with “instructions” for the model. This makes the whole estimation process a bit tricky as we don’t know what the output may be.

To find it out, we decided to run an experiment. The goal was to check the actual token usage with the three sample prompts, understand what factors have an impact on the output, and learn to estimate the cost of GPT-3 projects better.

How to measure token usage in GPT-3?

The experiment involved combining prompts with text corpora, sending them to the API, and then counting the tokens returned. We monitored the cost of each API request in the usage view and, because usage is reported per billing window, waited at least 5 minutes between requests so that each window contained only one request. We then calculated the cost by hand and compared it with the cost recorded in the usage view to see if there were any discrepancies.

The plan was simple. We needed to collect several corpora (~10), prepare the prompts, estimate token usage, and call the API a few times to see the actual results. Based on the results, we planned to search for correlations between input (corpora + prompt) and output. We wanted to discover what factors impact the length of the output and see if we could predict the token usage based only on the input and the prompt.


Step 1: Estimating the price of GPT-3 inputs

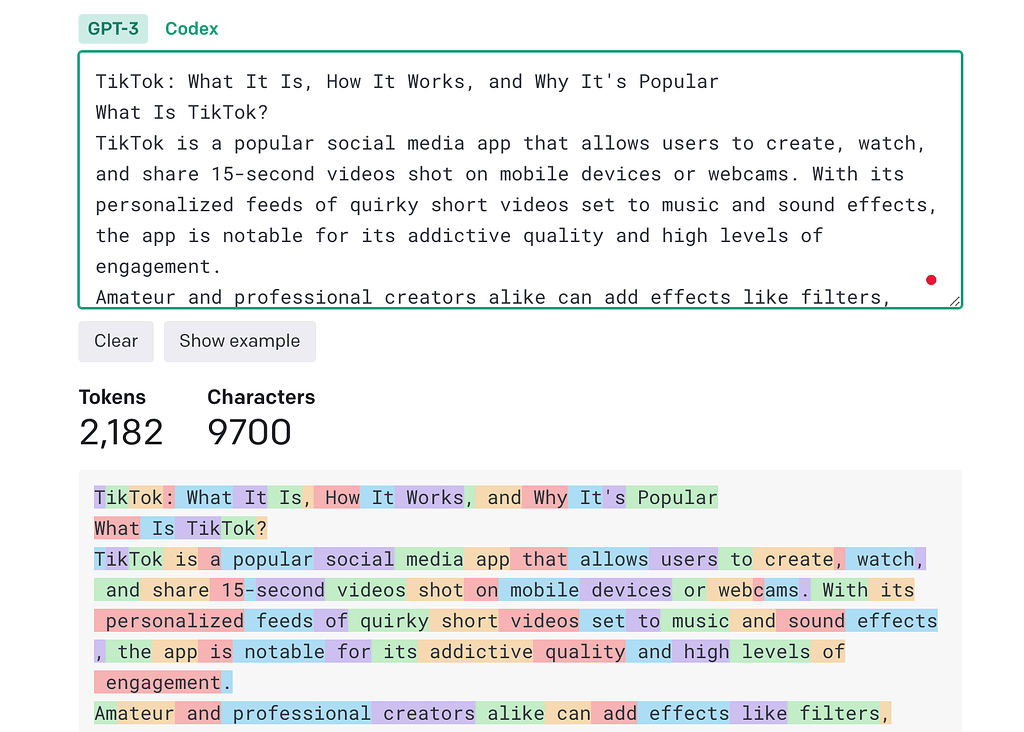

First, we wanted to check how accurate the information on the OpenAI Pricing page was. To do it, we took the results from the Tokenizer – an official tool provided by OpenAI calculating how a piece of text would be tokenized by the API and the total count of tokens in that piece of text – so we could later compare them with data from the usage view and actual billing.

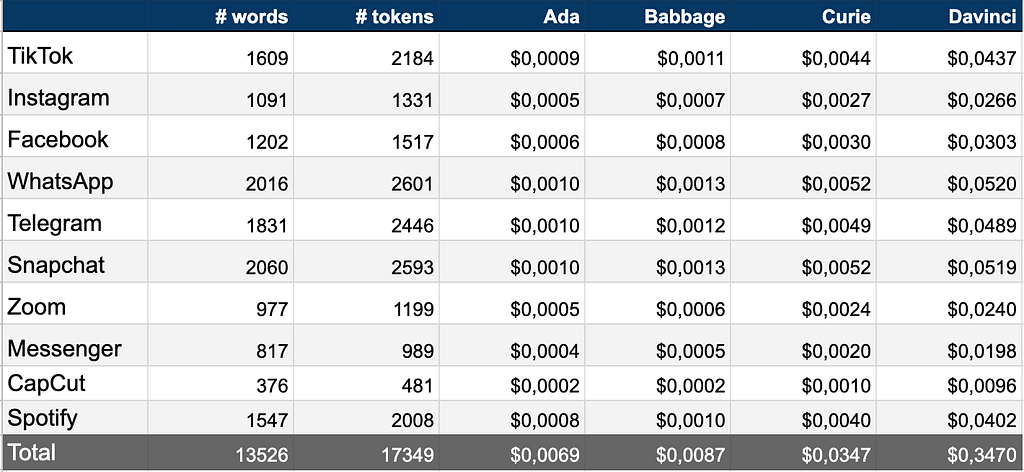

As our corpora, we took the descriptions of the ten most downloaded apps: TikTok, Instagram, Facebook, WhatsApp, Telegram, Snapchat, Zoom, Messenger, CapCut, and Spotify. These would allow us to run several operations on the text and test the corpora for different use cases, such as keyword searching, summarizing longer pieces of text, and transforming the text into project requirements. The length of the descriptions varied from 376 to 2060 words.

Let’s take a look at what it looked like. Here is the fragment of a TikTok description:

The text sample consisted of 1609 words and 2182 tokens, which – depending on the chosen GPT-3 model – should cost:

| Ada | Babbage | Curie | Davinci |
| --- | --- | --- | --- |
| $0.0009 | $0.0011 | $0.0044 | $0.0437 |

We did the same with each of the ten app descriptions in our corpora.

This was our reference for the actual tests with GPT-3 API.
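As a rough sketch, the per-model estimate for the TikTok sample can be reproduced as below. Only the Davinci price ($0.02 per 1K tokens) is stated in this article; the Ada, Babbage, and Curie prices are assumptions chosen to be consistent with the table above. The results agree with the table to within a rounding step in the last digit.

```python
# Prices in USD per 1K tokens. Only the Davinci price is stated in the article;
# the other three are assumptions consistent with the table above.
PRICE_PER_1K = {"Ada": 0.0004, "Babbage": 0.0005, "Curie": 0.0020, "Davinci": 0.0200}

TIKTOK_WORDS = 1609    # word count of the sample, from the article
TIKTOK_TOKENS = 2182   # token count reported by the Tokenizer, from the article


def input_cost(tokens: int, usd_per_1k: float) -> float:
    """Cost of sending `tokens` input tokens at a given price per 1K tokens."""
    return tokens / 1000 * usd_per_1k


costs = {model: input_cost(TIKTOK_TOKENS, price) for model, price in PRICE_PER_1K.items()}
for model, cost in costs.items():
    print(f"{model:8s} ${cost:.4f}")

# Cross-check of the "1 token ~ 0.75 words" rule of thumb against the Tokenizer.
rule_of_thumb_tokens = TIKTOK_WORDS / 0.75
print(f"rule of thumb: {rule_of_thumb_tokens:.0f} tokens vs. Tokenizer: {TIKTOK_TOKENS}")
```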

Step 2: Preparing the prompts

As a next step, we prepared the prompts. For the purposes of this experiment, we wanted to use three prompts for three different use cases.


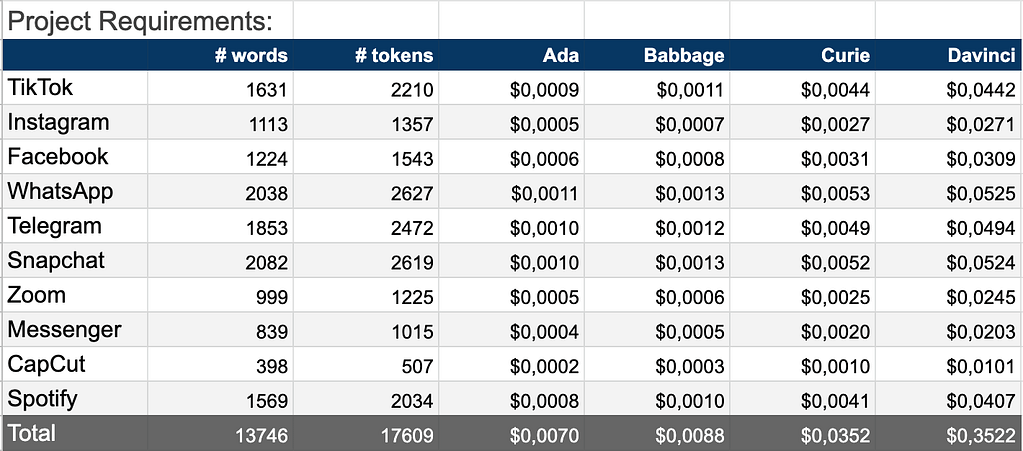

Prompt #1: Gathering project requirements with GPT-3

The first prompt was about gathering project requirements based on the given app description.

Describe in detail, using points and bullet points, requirements strictly related to the project of an application similar to the below description:

Our prompt was 22 words (148 characters) long, which equaled 26 tokens. We added these values to the corpora and calculated the estimated token usage again for each model.

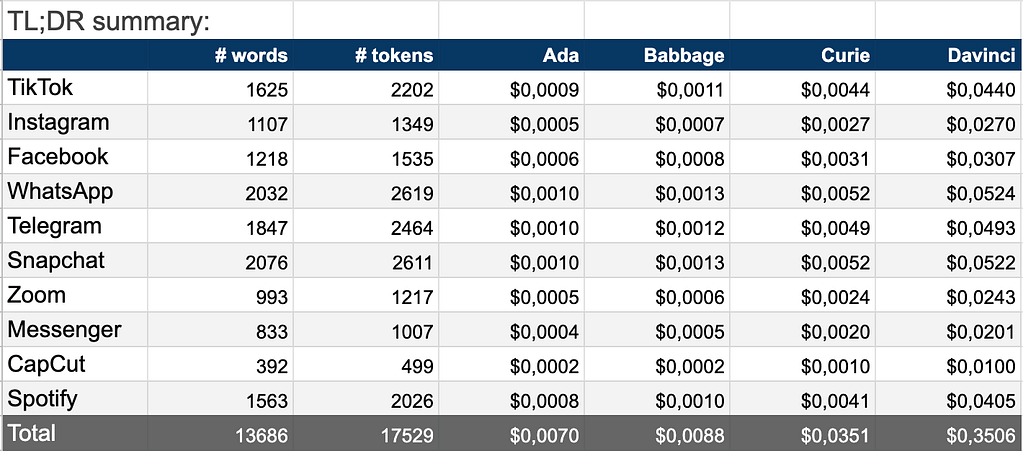

Prompt #2: Writing a TL;DR summary with GPT-3

The second prompt was about writing summaries of long fragments of text. The model’s “job” would be to identify the most important parts of the text and write a concise recap.

Create a short summary consisting of one paragraph containing the main takeaways of the below text:

Our prompt was 16 words (99 characters) long, which equaled 18 tokens. Again, we added these values to the corpora.

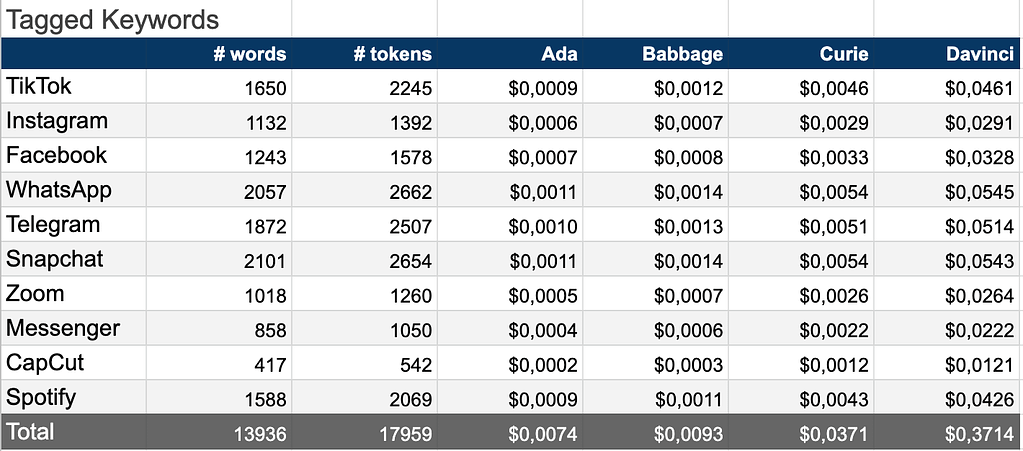

Prompt #3: Extracting keywords with GPT-3

The last prompt was supposed to find and categorize the keywords from the text and then present them in a certain form.

Parse the below content in search of keywords. Keywords should be short and concise. Assign each keyword a generic category, like a date, person, place, number, value, country, city, day, year, etc. Present it as a list of categories: keyword pairs.

It was 41 words (250 characters) long, which equaled 61 tokens. Together with the corpora text, it gave us:

The next step was supposed to finally give us some answers. We were going to send our prompts with corpora texts to the API, calculate the number of tokens returned in the output, and monitor our API requests in the usage view.
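To illustrate how a prompt changes the input estimate, the sketch below adds each prompt's token count to the 2182 tokens of the TikTok description and prices the sum at the Davinci rate. It is plain arithmetic on the numbers quoted above. Tokenizing the concatenated text may differ by a token or two, for example because of the line break that joins prompt and corpus.

```python
# Token counts quoted in the article for the three prompts.
PROMPT_TOKENS = {
    "project requirements": 26,
    "TL;DR summary": 18,
    "keyword extraction": 61,
}
CORPUS_TOKENS = 2182        # TikTok description, from the article
DAVINCI_USD_PER_1K = 0.02   # $1 per 50K tokens


def input_tokens(prompt_tokens: int, corpus_tokens: int) -> int:
    """Estimated input size: prompt tokens plus corpus tokens."""
    return prompt_tokens + corpus_tokens


for name, prompt_len in PROMPT_TOKENS.items():
    total = input_tokens(prompt_len, CORPUS_TOKENS)
    cost = total / 1000 * DAVINCI_USD_PER_1K
    print(f"{name:22s} {total} tokens  ~${cost:.4f}")
```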


Step 3: GPT-3 API testing

At this stage, we decided to focus only on the most advanced GPT model: Davinci – the one which is the core of ChatGPT.

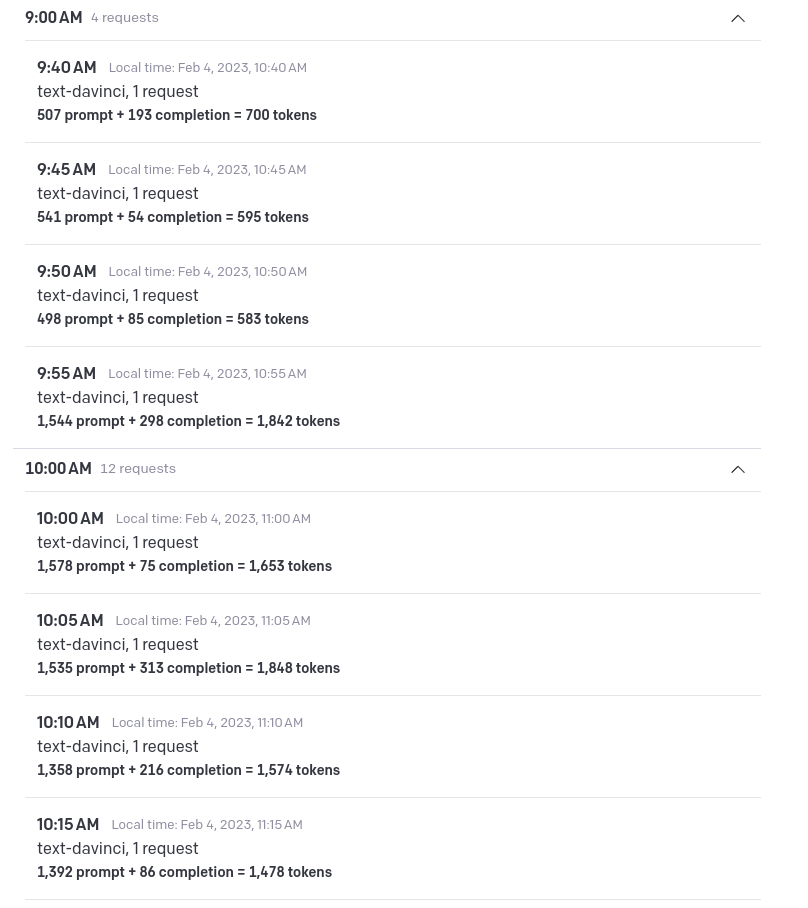

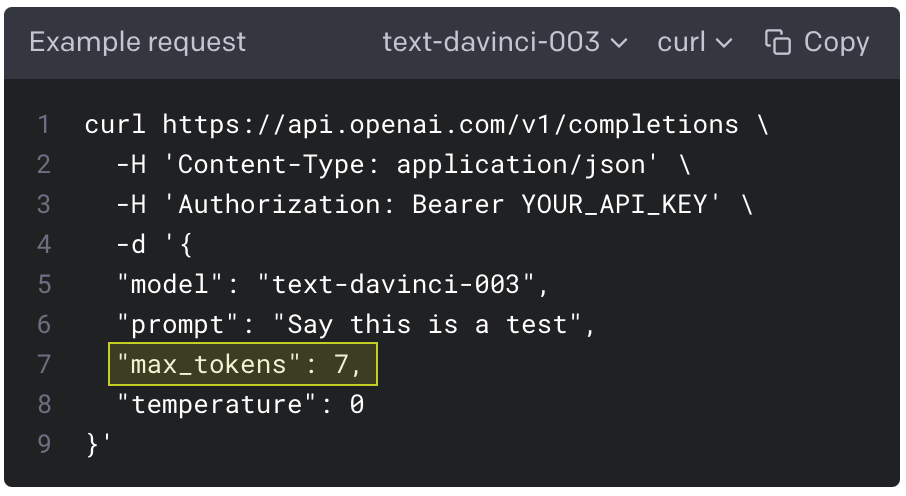

As the token usage on the OpenAI platform is measured in 5-minute timeframes, our script was sending only one API request every 5 minutes. Each request was a combination of one piece of text (corpora) and one prompt. That way, we could get precise information about the token usage for every combination and compare the results with the estimates. In total, we had 30 combinations to test: 3 prompts x 10 app descriptions. For the sake of this experiment, we didn’t add any additional variables in model settings, such as the model’s temperature, as it would significantly increase the number of combinations and the experiment’s cost.
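The request schedule described above can be sketched roughly as follows. This is not the original script: `send_request` is a hypothetical callable standing in for the actual API call, and the demo at the bottom uses a fake sender and skips the waiting, so nothing is billed.

```python
import itertools
import time
from typing import Callable, Dict, List

# Hypothetical type: a function that sends one combined prompt to the API and
# returns the token usage reported in the response metadata.
SendFn = Callable[[str], Dict[str, int]]


def run_experiment(
    prompts: Dict[str, str],
    corpora: Dict[str, str],
    send_request: SendFn,
    wait_seconds: float = 300,
    sleep: Callable[[float], None] = time.sleep,
) -> List[dict]:
    """Send every prompt/corpus combination, one request per usage window."""
    results = []
    combos = itertools.product(prompts.items(), corpora.items())
    for i, ((prompt_name, prompt), (app, text)) in enumerate(combos):
        if i > 0:
            sleep(wait_seconds)  # keep a single request in each 5-minute window
        usage = send_request(f"{prompt}\n\n{text}")
        results.append({"prompt": prompt_name, "app": app, **usage})
    return results


# Dry run with a fake sender (word count instead of real tokens) and no waiting.
def fake_send(full_prompt: str) -> Dict[str, int]:
    return {"prompt_tokens": len(full_prompt.split()), "completion_tokens": 0}


demo_prompts = {"requirements": "Describe...", "summary": "Create...", "keywords": "Parse..."}
demo_corpora = {f"app_{n}": f"description {n}" for n in range(10)}
demo = run_experiment(demo_prompts, demo_corpora, fake_send, sleep=lambda s: None)
print(len(demo), "combinations sent")
```

Injecting `sleep` as a parameter keeps the five-minute spacing in the real run while letting a dry run finish instantly.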

After sending these 30 requests, we compared the results shown in the Usage view with the ones taken directly from the metadata of our API calls.

The results were consistent with each other. Moreover, the token usage of the input – including both the prompt and the corpora – was also consistent with the usage estimated earlier with the Tokenizer.
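A check of this kind might look like the sketch below. The OpenAI API reports token counts in the `usage` field of each response (`prompt_tokens`, `completion_tokens`, `total_tokens`); the values in `example_usage` are made up for illustration and are not results from the experiment.

```python
def compare_with_estimate(
    estimated_input_tokens: int, usage: dict, usd_per_1k: float = 0.02
) -> dict:
    """Compare the Tokenizer estimate with the usage metadata of one API call."""
    return {
        # Difference between billed and estimated input tokens (0 means a match).
        "input_diff": usage["prompt_tokens"] - estimated_input_tokens,
        # Cost of the whole request: input and output are billed together.
        "cost_usd": usage["total_tokens"] / 1000 * usd_per_1k,
    }


# Illustrative values only (not taken from the experiment).
example_usage = {"prompt_tokens": 2208, "completion_tokens": 80, "total_tokens": 2288}
report = compare_with_estimate(2208, example_usage)
print(report)
```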

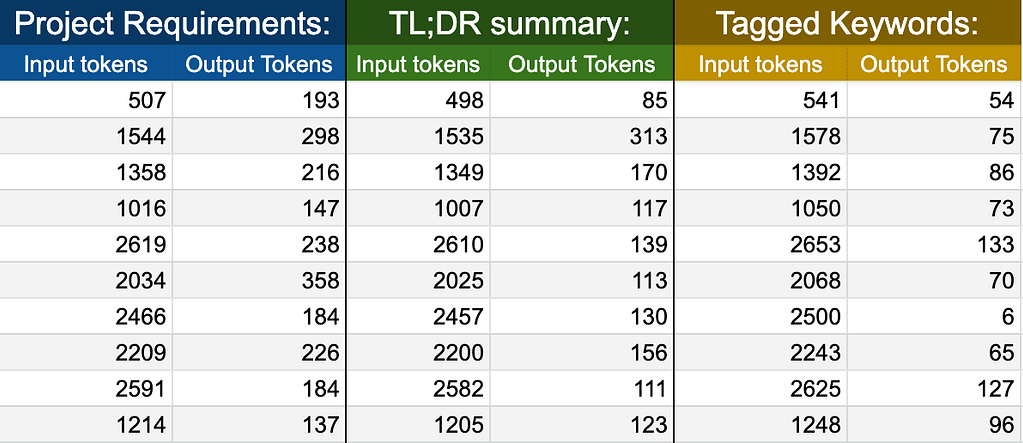

At this point, we knew that we were able to estimate the token usage of the input with high accuracy. The next step was to check if there was any correlation between the length of the input and the length of the output and find out if we are able to estimate the token usage of the output.

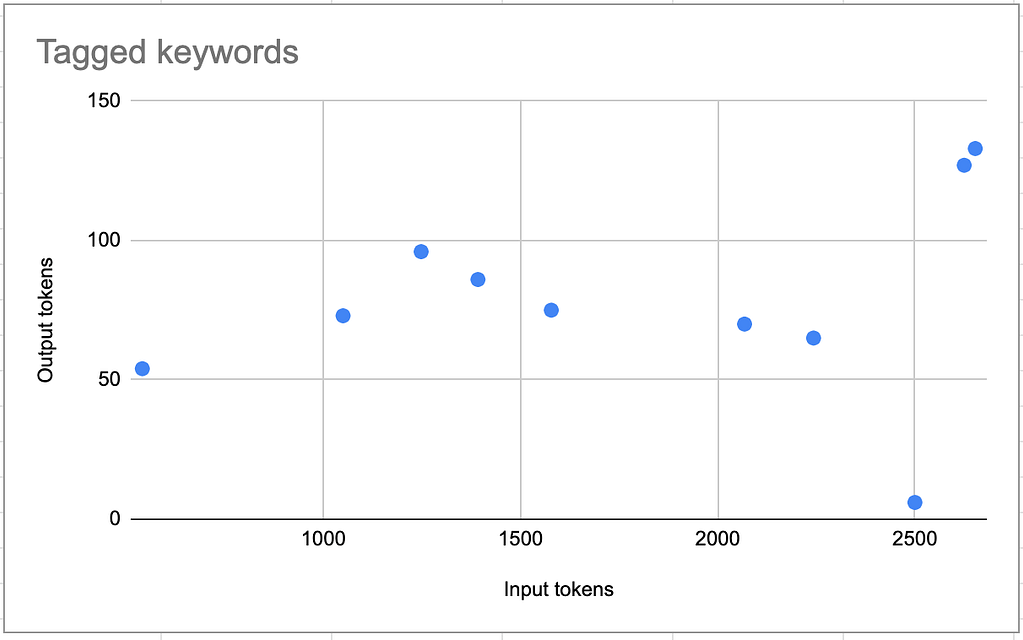

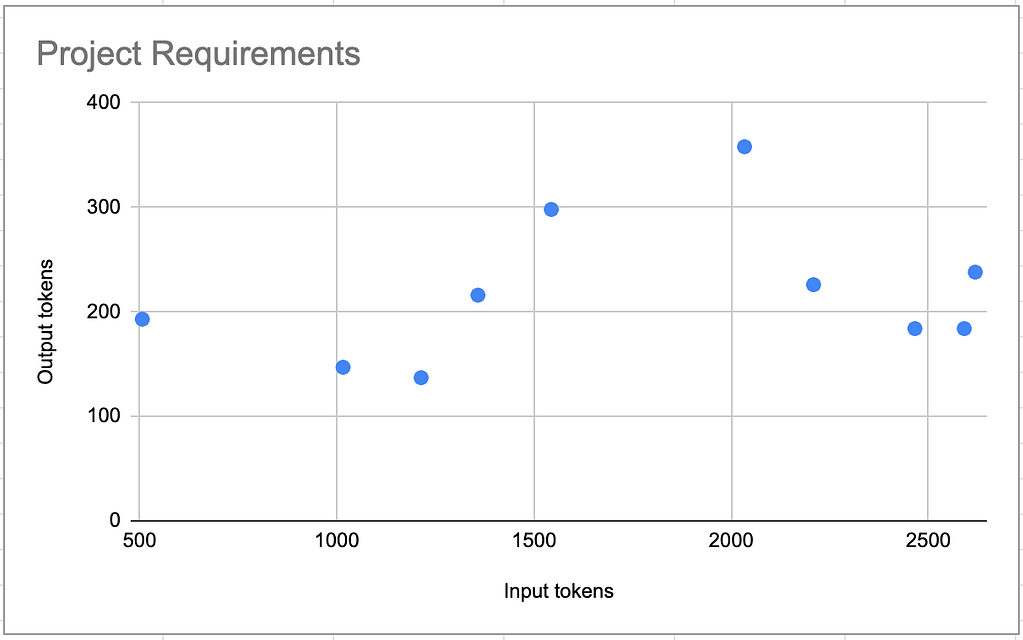

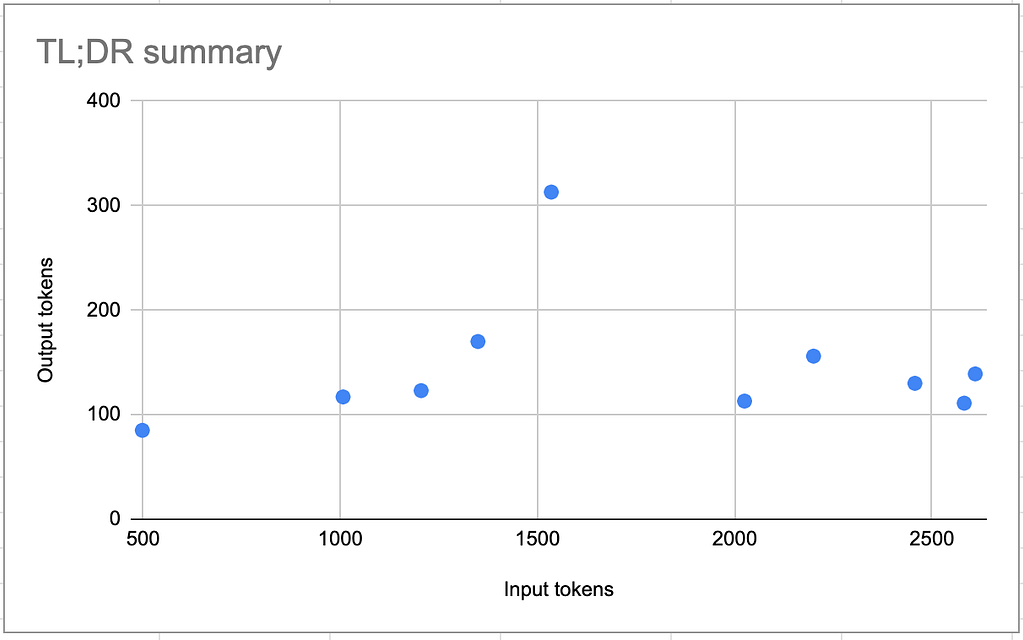

The correlation between the number of input tokens and the number of output tokens was very weak*. Measuring the number of input tokens was not enough to estimate the total number of tokens used in a single request.

* The slope varied between 0.0029 in the TL;DR summary and 0.0246 in the project requirements request.
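For readers who want to run the same kind of check on their own data, here is a minimal sketch that computes the least-squares slope and the Pearson correlation coefficient for input and output token counts. The sample numbers are invented for illustration and are not the measurements from this experiment.

```python
from math import sqrt
from typing import Sequence, Tuple


def slope_and_correlation(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope of y on x and Pearson correlation coefficient."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx, sxy / sqrt(sxx * syy)


# Invented sample data: input tokens per request and output tokens returned.
sample_input_tokens = [600, 1200, 1800, 2400, 3000]
sample_output_tokens = [70, 95, 60, 110, 85]

slope, r = slope_and_correlation(sample_input_tokens, sample_output_tokens)
print(f"slope = {slope:.4f}, r = {r:.2f}")
```

A slope close to zero means that longer inputs do not reliably produce longer outputs, which is the pattern described above.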

What factors impact the cost of using GPT-3?

While there was no clear correlation between the number of input tokens (prompt + corpora) and the number of output tokens (response), we could clearly see that the factor that actually impacted the number of output tokens was the prompt itself – the instruction given to the model. In all of the analyzed cases, it took more tokens to generate project requirements than to extract and group keywords. However, the differences in these cases were rather small and did not really impact the cost of a single request, which was ~$0.04. This would probably change if the prompt required the GPT-3 model to create a longer piece of text (e.g. a blog article) based on a brief.

Apart from the specific use case (what we use the model for), there are also other factors that can impact the cost of GPT integration in your project. Among others, these would be:

Model’s temperature

The temperature parameter controls the randomness of the model’s outputs, and setting it to a higher value can result in more diverse and unpredictable outputs. Since the API is billed per token, temperature affects the cost only indirectly: less predictable outputs make the length of the response, and therefore the number of output tokens, harder to estimate.

Quality of prompt

A good prompt will minimize the risk of receiving the wrong response, and with it the number of requests you need to repeat and pay for.

Availability

The cost of using GPT-3 may also be impacted by the availability of the model. If demand for the model is high, the cost may increase due to limited availability.

Customization

The cost of using GPT-3 can also be influenced by the level of customization required. If you need specific functionality, additional development work may be required, which can increase the cost.

As a user, you are able to control the budget by setting soft and hard limits. With a soft limit, you will receive an email alert once you pass a certain usage threshold, and a hard limit will simply reject any subsequent API requests once it’s reached. It is also possible to set the max_tokens parameter in the request.

However, you need to keep in mind that the limits you set will have an impact on the efficiency of the model. If the hard limit is too low, API requests will simply be rejected, so you – and your users – won’t get any response. Similarly, if max_tokens is set too low, the response may be cut off before it is complete.
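Because max_tokens caps the length of the response, it also puts an upper bound on the cost of a single request. The sketch below shows that bound for the Davinci price of $0.02 per 1K tokens; the input size and the cap of 256 tokens are arbitrary example values.

```python
def worst_case_cost(input_tokens: int, max_tokens: int, usd_per_1k: float = 0.02) -> float:
    """Upper bound on the cost of one request when the output is capped by max_tokens."""
    return (input_tokens + max_tokens) / 1000 * usd_per_1k


# Example values (assumptions): an input of 1800 tokens and a cap of 256 output tokens.
bound = worst_case_cost(input_tokens=1800, max_tokens=256)
print(f"at most ${bound:.4f} per request")
```

The actual cost is usually lower, since the model often stops before reaching the cap.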

How to estimate the cost of using GPT-3? OpenAI pricing simulation

The experiment has shown that it is very difficult to provide precise estimates of token usage based only on the corpora and prompts. The cost of using GPT-3 can be influenced by a wide range of factors, including the specific use case, the quality of the prompt, the level of customization, the volume of API calls, and the computational resources required to run the model. Based on the conducted experiment, we can roughly estimate the cost of using GPT-3 only for certain use cases, such as keyword extraction, gathering project requirements, or writing summaries.

Cost of using GPT-3 – project simulation

Let’s take a look at the first case and assume that you have a customer service chatbot on your website, and you would like to know what the users usually ask about. To get such insights, you need to:

- analyze all the messages they send,

- extract the entities (e.g. product names, product categories),

- and assign each an appropriate label.

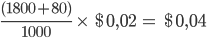

You have ~15,000 visitors per month, and every visitor sends 3 requests twice a week. Assuming four weeks per month, this gives 360K requests per month. If we take the average length of the input and output from the experiment (~1800 and ~80 tokens) as representative values, we can easily calculate the price of one request.

At the Davinci rate of $0.02 per 1K tokens, one request of ~1880 tokens costs ~$0.04, so the cost of using GPT-3 (Davinci model) in the analyzed case would be ~$14.4K per month.
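The simulation can be written out as a short calculation. All inputs come from the scenario above; the four weeks per month is the assumption implied by the 360K figure. Note that the ~$14.4K estimate uses the per-request price rounded to $0.04, while the unrounded price gives a slightly lower total.

```python
VISITORS_PER_MONTH = 15_000
REQUESTS_PER_VISIT = 3
VISITS_PER_WEEK = 2
WEEKS_PER_MONTH = 4          # assumption implied by the 360K requests figure
INPUT_TOKENS = 1800          # average input length from the experiment
OUTPUT_TOKENS = 80           # average output length from the experiment
DAVINCI_USD_PER_1K = 0.02    # $1 per 50K tokens

requests_per_month = (
    VISITORS_PER_MONTH * REQUESTS_PER_VISIT * VISITS_PER_WEEK * WEEKS_PER_MONTH
)
cost_per_request = (INPUT_TOKENS + OUTPUT_TOKENS) / 1000 * DAVINCI_USD_PER_1K

monthly_unrounded = requests_per_month * cost_per_request
monthly_rounded = requests_per_month * round(cost_per_request, 2)

print(f"requests per month: {requests_per_month:,}")
print(f"cost per request:   ${cost_per_request:.4f}")
print(f"monthly cost:       ${monthly_unrounded:,.0f} (unrounded), "
      f"${monthly_rounded:,.0f} (with ~$0.04 per request)")
```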

It’s important to note, however, that this is only a simplified simulation, and its results are not fully representative. As the actual cost of building any GPT-3-powered product depends on multiple factors (the complexity of the project, the amount and quality of data, prompts, model settings, number of users), a safe margin of error for such an estimate could be as high as 50-100%. To get more reliable estimates, it would be useful to run a proof of concept project and test different scenarios on a specific set of data – your own corpora samples.

How much does it cost to use GPT? Summary

GPT-3 is a relatively new technology, and there are still many unknowns related to its commercial use. The cost of using it is one of them. While it is possible to measure the token usage and its price on the input side ($0.02 per 1,000 tokens in the most advanced Davinci model, or ~$0.04 per request in our tests), it is hard to predict these values for the output. There are many variables that impact them, and the correlation between input and output is rather low.

Because of that, any “raw” estimates are pure guesswork. To increase the accuracy of an estimate (but also to validate the feasibility of using GPT-3 in a certain use case), it is necessary to run a proof of concept. In such a PoC, we take sample corpora and test the model with different prompts and different model settings to find the best combination.


BONUS: How much does it cost to use GPT-3.5 turbo with OpenAI Foundry?

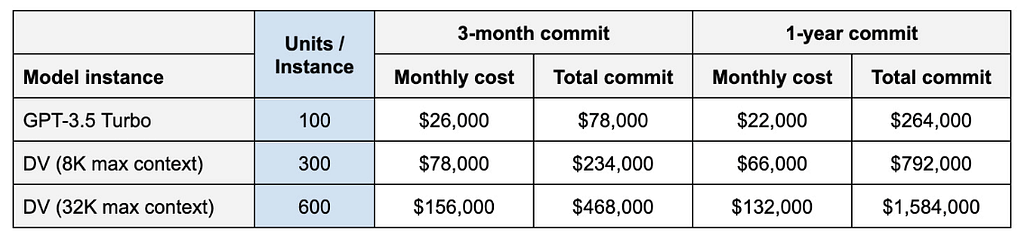

On February 21, the information about the new OpenAI offering called Foundry went viral, making its way from Twitter to the most recognized tech media, such as TechCrunch and CMSWire. According to the product brief, running a lightweight version of GPT-3.5 will cost $78,000 for a three-month commitment or $264,000 for a one-year commitment. Running the most advanced version of the Davinci model (with a token limit 8 times higher than the one we had in GPT-3!) will cost $468,000 for a three-month commitment or $1,584,000 for a one-year commitment.
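To make these commitments easier to compare, the sketch below converts them into a monthly equivalent. It only divides the totals quoted above by the length of the commitment; the plan labels are ours, not official product names.

```python
# Commitment totals in USD, as quoted from the product brief: {months: total}.
FOUNDRY_PLANS = {
    "GPT-3.5 (lightweight)": {3: 78_000, 12: 264_000},
    "Davinci (most advanced)": {3: 468_000, 12: 1_584_000},
}


def monthly_equivalent(total_usd: float, months: int) -> float:
    """Average monthly price of a commitment."""
    return total_usd / months


for plan, commitments in FOUNDRY_PLANS.items():
    for months, total in commitments.items():
        per_month = monthly_equivalent(total, months)
        print(f"{plan:24s} {months:2d} months: ${per_month:,.0f} per month")
```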

But what is this all about? As we can read on TechCrunch:

If the screenshots are to be believed, Foundry — whenever it launches — will deliver a “static allocation” of compute capacity (…) dedicated to a single customer. (…)

Foundry will also offer service-level commitments for instance uptime and on-calendar engineering support. Rentals will be based on dedicated compute units with three-month or one-year commitments; running an individual model instance will require a specific number of compute units.

It seems, though, that the service-level commitment should not be treated as a fixed-price contract. For now, it would be safe to assume that the price covers only access to a certain model on the dedicated capacity “with full control over the model configuration and performance profile”, as we can read on the product brief screenshots.

The prices of tokens in the new models were not announced in the product brief. In the official OpenAI documentation update from March 1, though, we could read that GPT-3.5 Turbo comes at 1/10th of the cost of the GPT-3 Davinci model – which gives us $0.002 per 1K tokens in GPT-3.5 Turbo.

In the analyzed case of having a customer service chatbot on your website, with ~15,000 visitors per month, each sending 3 requests twice a week, the estimated price of using GPT-3.5 Turbo would be not ~$14.4K but ~$1.44K.

The next update from March 14 revealed the pricing for the two remaining models – now officially named GPT-4.

The GPT-4 with an 8K context window (about 13 pages of text) will cost $0.03 per 1K prompt tokens, and $0.06 per 1K completion tokens. The GPT-4-32k with a 32K context window (about 52 pages of text) will cost $0.06 per 1K prompt tokens, and $0.12 per 1K completion tokens.

As you can see, there is a significant difference in the pricing model compared to the older versions of the model. While GPT-3 and GPT-3.5 models had a fixed price per 1K tokens, in GPT-4 we will need to distinguish the cost of the prompt tokens and the completion (output) tokens. If we applied it to the previously analyzed scenario (360K requests per month, each consisting of 1800 prompt tokens and 80 completion tokens), we would get the total cost of using GPT-4:

- $21.2K with the 8K context window,

- $42.3K with the 32K context window.
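The calculation behind these totals can be sketched as follows, with separate prices for prompt and completion tokens. The prices are the ones quoted in this article; for the older models, both prices are simply set to the same value. For text-davinci-003 and gpt-3.5-turbo the unrounded result is slightly lower than the ~$14.4K and ~$1.44K quoted earlier, because those figures round the price of a single request to $0.04.

```python
# (prompt price, completion price) in USD per 1K tokens, as quoted in the article.
MODEL_PRICES = {
    "text-davinci-003": (0.02, 0.02),
    "gpt-3.5-turbo": (0.002, 0.002),
    "gpt-4 (8K context)": (0.03, 0.06),
    "gpt-4-32k": (0.06, 0.12),
}


def monthly_cost(
    requests: int,
    prompt_tokens: int,
    completion_tokens: int,
    prompt_usd_per_1k: float,
    completion_usd_per_1k: float,
) -> float:
    """Monthly cost when prompt and completion tokens are priced separately."""
    per_request = (
        prompt_tokens / 1000 * prompt_usd_per_1k
        + completion_tokens / 1000 * completion_usd_per_1k
    )
    return requests * per_request


# Scenario from the article: 360K requests, 1800 prompt and 80 completion tokens each.
totals = {
    model: monthly_cost(360_000, 1800, 80, prompt_price, completion_price)
    for model, (prompt_price, completion_price) in MODEL_PRICES.items()
}
for model, total in totals.items():
    print(f"{model:20s} ${total:,.0f} per month")
```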

While this cost is much higher than with the text-davinci-003 model (not to mention gpt-3.5-turbo!), we need to keep in mind that the use cases for each version of the GPT models will be different. This brings us back to the earlier point: any “raw” estimates are pure guesswork, and to increase the accuracy of an estimate (but also to validate the feasibility of using a certain model in a certain use case), it is necessary to run a proof of concept.