On February 21, information about OpenAI's new offering, Foundry, went viral, making its way from Twitter to some of the most recognized tech media, such as TechCrunch and CMSWire. Even though it hasn't been officially confirmed yet, it is already being widely discussed. What's all the fuss about? Let's find out!

What is OpenAI Foundry?

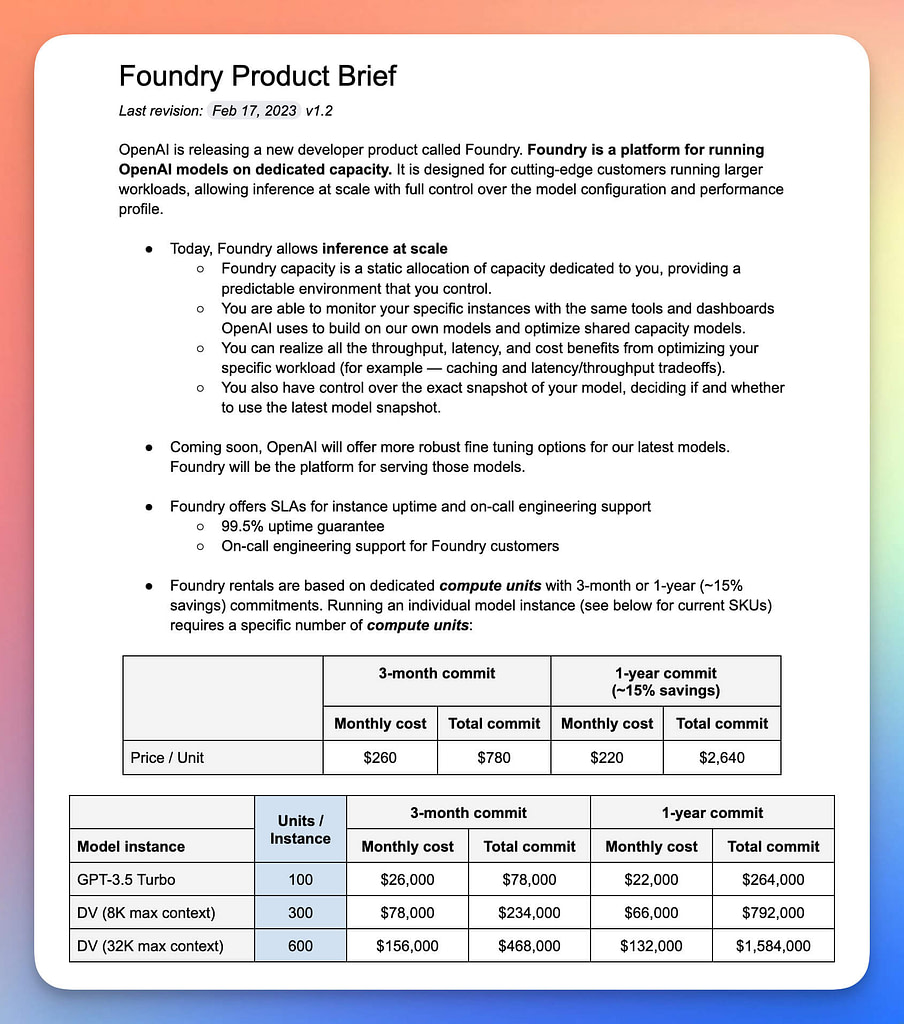

As we can read in the Foundry Product Brief screenshots posted on Twitter, Foundry is a platform for running OpenAI models on dedicated capacity. It promises to let users process larger workloads than previous GPT models could, with full control over the model configuration and performance profile.

From what we can see in the screenshots (if they are to be believed), Foundry will offer its customers a fixed amount of compute capacity. As TechCrunch reports:

Users will be able to monitor specific instances with the same tools and dashboards that OpenAI uses to build and optimize models. In addition, Foundry will provide some level of version control, letting customers decide whether or not to upgrade to newer model releases, as well as “more robust” fine-tuning for OpenAI’s latest models.

In addition to these features, Foundry will also provide service-level agreements for uptime and engineering support during regular business hours. Customers will be charged based on the number of dedicated compute units required to run their model instances for either a three-month or one-year commitment.

Foundry is supposed to come with three model instances:

- GPT-3.5 Turbo

- OpenAI DV (8K max context)

- OpenAI DV (32K max context)

The first model instance, GPT-3.5 Turbo, was already released through the OpenAI API on March 1, 2023. It's the same model that is used in the famous ChatGPT, and it's still being developed: according to the official product update, a new stable release is planned for April.

The other two models listed in the product brief are believed to be the long-awaited GPT-4.

Are OpenAI Foundry and GPT-4 the same?

While there are strong indications that the DV models offered to Foundry users are GPT-4, it's important to understand that OpenAI Foundry is NOT GPT-4. OpenAI Foundry is a platform for running OpenAI models on dedicated capacity. GPT-4 is the name of the set of models that will probably run on the Foundry platform.

As of March 1, 2023, the GPT-4 release date is not yet known, and neither are any details of the GPT-4 models' capacity. As with any information about OpenAI's unreleased products, there are still more rumors than facts. One day, a visual comparing the number of parameters in GPT-3 and GPT-4 goes viral; the next day, we hear Sam Altman, OpenAI's CEO, saying:

The GPT-4 rumor mill is a ridiculous thing. I don’t know where it all comes from. People are begging to be disappointed and they will be. The hype is just like… We don’t have an actual AGI and that’s sort of what’s expected of us.

In short, GPT-4 is expected to be an evolution, not a revolution, and not much bigger than GPT-3. According to Michael Spencer, we can assume it will have around 175B-280B parameters.

#GPT4 won’t actually be that much bigger than #GPT3

Twitter remains very prone to misinformation. pic.twitter.com/hX3A0IdLT6

— AI Supremacy (@AISupremacyNews) February 27, 2023

It is still unclear whether GPT-4 models will be available only to Foundry users or, like previous models, to all OpenAI users.

UPDATE (March 15, 2023): Yesterday, OpenAI officially announced GPT-4. How GPT-4 differs from GPT-3 is material for a completely new article, but it's important to note that the new models will be available outside the Foundry platform and will have larger context windows than the GPT-3 and GPT-3.5 models.


How is OpenAI Foundry different from GPT-3?

Now that you understand what OpenAI Foundry is, let’s see what it actually means for OpenAI users.

Higher token limits for Foundry users

Compared to what was (and still is) possible with GPT-3 models, the model instances purportedly planned for Foundry offer much higher token limits per request. The most capable GPT-3 models, such as text-davinci-003, accept about 4,000 tokens per request, while the DV models are listed with 8K and 32K of context. Depending on the chosen model, that means up to 8 times more tokens per request than in GPT-3.

While the token limits in GPT-3 allow users to process ~3,000 English words in a single request (roughly 6 single-spaced pages of text), the largest new model should allow as many as ~24,000 words (~48 pages) in one request. More context could enable new use cases and expand what AI can do in a single request; we'll get to that later.
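The word and page estimates above follow from a common rule of thumb for English text. The sketch below reproduces the arithmetic, assuming ~0.75 words per token and ~500 words per single-spaced page; both ratios are approximations, not figures published by OpenAI, and real counts vary with the text and the tokenizer. The context limits are rounded (text-davinci-003 actually accepts 4,097 tokens, and "8K" and "32K" mean 8,192 and 32,768).

```python
# Rough conversion from a context window (in tokens) to English words and pages.
# Assumptions (rules of thumb, not official OpenAI figures):
WORDS_PER_TOKEN = 0.75  # ~3 words per 4 tokens of English text
WORDS_PER_PAGE = 500    # ~500 words on a single-spaced page


def context_capacity(max_tokens: int) -> tuple[int, int]:
    """Return (approximate words, approximate pages) that fit in max_tokens."""
    words = round(max_tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


# Rounded context limits: ~4K for GPT-3 (text-davinci-003), 8K and 32K for the DV models
for name, limit in [("GPT-3", 4_000), ("DV 8K", 8_000), ("DV 32K", 32_000)]:
    words, pages = context_capacity(limit)
    print(f"{name}: ~{words:,} words, ~{pages} pages")
```

With these ratios, GPT-3 comes out at ~3,000 words (~6 pages) and the 32K model at ~24,000 words (~48 pages), matching the figures quoted in the brief's coverage.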

Dedicated servers

With dedicated capacity for running OpenAI models, users will get more control over model configuration and performance profile. Even though no details have been revealed so far, we can expect users to be able to adjust various model parameters and optimize model usage for particular use cases, focusing on specific metrics, be it the model's accuracy, speed, or token usage.

Because the computing power is dedicated, Foundry users will have exclusive access to resources allocated specifically to running their models. The models should run smoothly and efficiently, without being slowed down by other workloads sharing the same hardware, and the required computing power should be guaranteed to be available.

Dedicated computing power should also help with the problem of token usage limits. Right now, GPT models are restricted by a limited number of tokens per request and per timeframe (for example, per minute). Getting past these limits may significantly increase what the models can handle in commercial use.
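To make the "tokens per timeframe" restriction concrete, here is a minimal client-side sketch of a tokens-per-minute budget using a sliding 60-second window. The class name `TokenBudget` and the 10,000-token limit are hypothetical, chosen for illustration; they are not OpenAI's API or its actual rate-limit values. On shared capacity, a client has to hold back any request that would push the window over the limit; dedicated capacity would presumably raise that ceiling.

```python
import time
from collections import deque


class TokenBudget:
    """Sliding-window budget: at most `limit` tokens within any `window` seconds.

    Hypothetical helper for illustration; the limit is a placeholder, not an OpenAI value.
    """

    def __init__(self, limit: int, window: float = 60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.events = deque()  # (timestamp, tokens) for each accepted request

    def _used(self, now: float) -> int:
        # Drop requests that have fallen out of the window, then sum the rest.
        while self.events and now - self.events[0][0] >= self.window:
            self.events.popleft()
        return sum(tokens for _, tokens in self.events)

    def try_consume(self, tokens: int) -> bool:
        """Record the request and return True if it fits; otherwise return False."""
        now = self.clock()
        if self._used(now) + tokens > self.limit:
            return False
        self.events.append((now, tokens))
        return True


# Usage with a controllable fake clock instead of real waiting
fake_time = [0.0]
budget = TokenBudget(limit=10_000, clock=lambda: fake_time[0])
print(budget.try_consume(8_000))  # True
print(budget.try_consume(4_000))  # False: 12,000 tokens would exceed the window's limit
fake_time[0] = 61.0
print(budget.try_consume(4_000))  # True: the first request has left the window
```

A sliding window was chosen over fixed per-minute buckets because it never lets a burst straddling a minute boundary exceed the limit.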

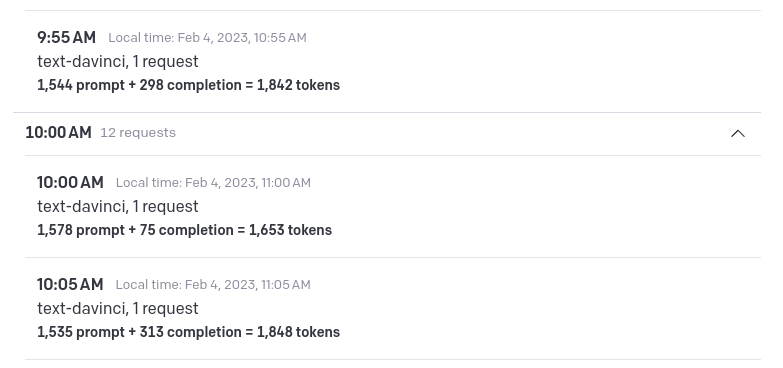

Analytics

With the current GPT-3 models, the analytics dashboard is very limited: users can basically see only billing information and a summary of token usage grouped into 5-minute intervals. If the screenshots are to be believed, Foundry users will be able to monitor specific instances with the same tools and dashboards that OpenAI uses to build and optimize models, which could support the "more robust" fine-tuning mentioned in the brief.
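For comparison, the 5-minute usage summary described above amounts to a simple aggregation. The sketch below groups hypothetical usage records, given as (timestamp, tokens) pairs, into 5-minute buckets; the record format is an assumption for illustration, not the format of OpenAI's dashboard or of any OpenAI API.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def bucket_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its 5-minute interval."""
    return ts - timedelta(minutes=ts.minute % 5, seconds=ts.second,
                          microseconds=ts.microsecond)


def usage_by_interval(records):
    """Sum tokens per 5-minute interval; records are (datetime, tokens) pairs."""
    totals = defaultdict(int)
    for ts, tokens in records:
        totals[bucket_start(ts)] += tokens
    return dict(sorted(totals.items()))


# Hypothetical usage log: (request time, tokens used)
records = [
    (datetime(2023, 3, 1, 9, 1), 1200),
    (datetime(2023, 3, 1, 9, 4, 30), 800),
    (datetime(2023, 3, 1, 9, 7), 500),
]
for start, tokens in usage_by_interval(records).items():
    print(start.strftime("%H:%M"), tokens)
# 09:00 2000
# 09:05 500
```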

Higher cost of OpenAI Foundry

All of that will come at a price. Even if the figures in the product brief are just smart price anchoring and the actual cost turns out lower, it's still a cost on top of the token usage itself, and it's not low.

How much will it cost to use OpenAI Foundry?

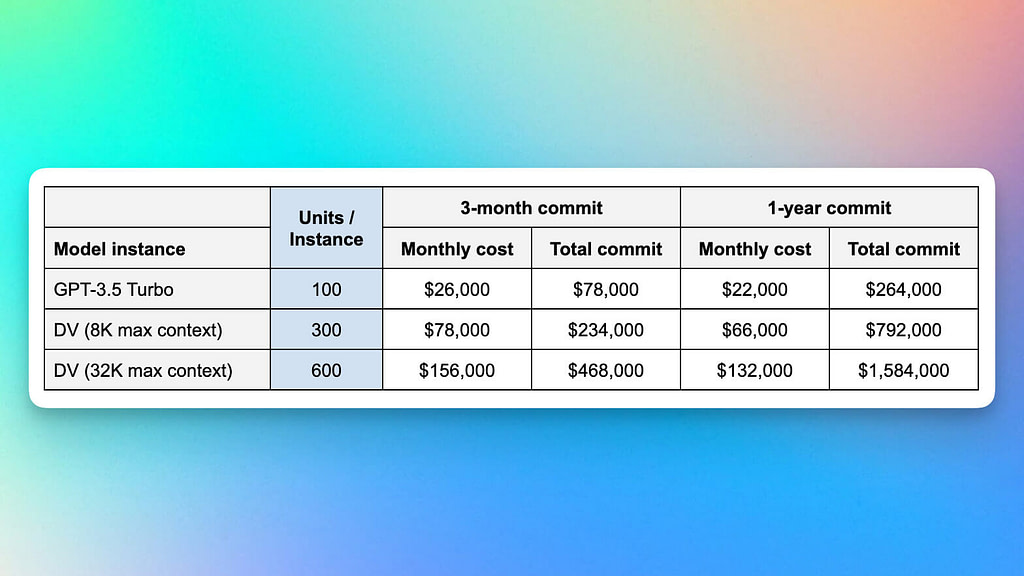

According to the product brief, running an individual model instance will require a specific number of compute units:

- GPT-3.5 Turbo – 100 units

- DV (8K max context) – 300 units

- DV (32K max context) – 600 units

Running GPT-3.5 Turbo will cost $78,000 for a three-month commitment or $264,000 for a one-year commitment. Running the most advanced DV model (with 8 times the token limit of GPT-3!) will cost $468,000 for a three-month commitment or $1,584,000 for a one-year commitment. And that's just for the compute units.
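The figures in the brief are consistent with a flat price per compute unit per month: $78,000 for 100 units over three months works out to $260 per unit per month, and $264,000 over a year to $220. The sketch below derives those rates and applies them to each model instance, assuming the same unit price applies to every model; the DV 8K totals it prints are our extrapolation under that assumption, not prices from the brief.

```python
# Compute units per model instance, from the product brief screenshots.
UNITS = {"GPT-3.5 Turbo": 100, "DV (8K)": 300, "DV (32K)": 600}

# Per-unit monthly rates implied by the GPT-3.5 Turbo prices in the brief.
# Assumption: the same rate applies to every model instance.
RATE_3_MONTHS = 78_000 / (100 * 3)     # $260 per unit per month
RATE_12_MONTHS = 264_000 / (100 * 12)  # $220 per unit per month


def commitment_cost(units: int, months: int) -> int:
    """Total compute-unit cost in USD for a 3- or 12-month commitment."""
    rate = {3: RATE_3_MONTHS, 12: RATE_12_MONTHS}[months]
    return round(units * rate * months)


for model, units in UNITS.items():
    print(f"{model}: ${commitment_cost(units, 3):,} (3 months), "
          f"${commitment_cost(units, 12):,} (1 year)")
```

The DV 32K results match the brief's $468,000 and $1,584,000, which suggests the pricing is linear in compute units. Under that assumption, DV 8K would come to $234,000 for three months or $792,000 for a year.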

Additionally, users will probably still need to pay for the actual token usage. How much? It is hard to say at this point, as token prices for the DV models have not been announced yet. According to a recent OpenAI documentation update, though, GPT-3.5 Turbo costs 1/10th as much as the GPT-3 Davinci model ($0.02 per 1K tokens), which gives us $0.002 per 1K tokens for GPT-3.5 Turbo.
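Token charges scale linearly with volume. A minimal sketch, using $0.002 per 1K tokens for GPT-3.5 Turbo and $0.02 per 1K tokens for GPT-3 Davinci, with a hypothetical workload of 100 million tokens a month. DV token prices are unknown, so they are left out.

```python
# Price per 1,000 tokens in USD (GPT-3.5 Turbo and GPT-3 Davinci, March 2023).
PRICE_PER_1K = {"gpt-3.5-turbo": 0.002, "davinci": 0.02}


def token_cost(model: str, tokens: int) -> float:
    """Usage cost in USD for a given number of tokens."""
    return tokens / 1_000 * PRICE_PER_1K[model]


monthly_tokens = 100_000_000  # hypothetical workload: 100M tokens per month
for model in PRICE_PER_1K:
    print(f"{model}: ${token_cost(model, monthly_tokens):,.2f} per month")
```

Even at 100 million tokens a month, GPT-3.5 Turbo usage would come to about $200, which is small next to a $78,000 compute commitment.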

As you can see, there are still many unknowns, but we are keeping an eye on them. One thing is certain: using Foundry won't be cheap.


Use cases of OpenAI Foundry

More tokens per request mean more possible use cases than GPT-3 allows.

As the largest DV model is expected to have eight times the context length of OpenAI's current GPT models (roughly 48 pages of text in a single request!), it may significantly increase the number of applications for generative AI.

We asked ChatGPT to conduct a thought experiment and come up with possible use cases for GPT models with an increased token limit. According to its answer, such a model could:

- read entire scientific articles,

- prepare executive summaries of studies and business reports,

- improve the accuracy and fluency of machine-translated text,

- generate more complex and engaging dialogue in conversational agents or chatbots,

- answer more complex questions that require longer and more detailed responses,

- write entire articles,

- create comprehensive and accurate knowledge graphs,

- classify longer pieces of text into specific categories or topics,

- process large volumes of data to identify and extract entities, such as people, places, and organizations,

- analyze the sentiment of longer pieces of text, such as product reviews or social media posts.

The list could go on and on. The key point is that what wasn’t possible with GPT-3 due to its limits per request should soon be possible with the new models and the Foundry platform.
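One way to see the practical difference: a document longer than the context window has to be split into chunks and processed in several requests. The sketch below estimates how many requests a document needs for a given limit, assuming ~0.75 words per token and reserving a hypothetical 500 tokens per request for instructions and the model's answer. A real pipeline would count tokens with the model's tokenizer rather than estimating from words.

```python
import math

WORDS_PER_TOKEN = 0.75  # rule-of-thumb ratio for English text (assumption)


def requests_needed(doc_words: int, context_tokens: int,
                    reserved_tokens: int = 500) -> int:
    """Number of chunks (one request each) needed to cover a doc_words-long document.

    reserved_tokens is a hypothetical allowance for the prompt and the model's reply.
    """
    words_per_chunk = int((context_tokens - reserved_tokens) * WORDS_PER_TOKEN)
    return math.ceil(doc_words / words_per_chunk)


report_words = 20_000  # e.g., a ~40-page business report
for name, limit in [("GPT-3 (4K)", 4_000), ("DV 8K", 8_000), ("DV 32K", 32_000)]:
    print(f"{name}: {requests_needed(report_words, limit)} request(s)")
```

Under these assumptions, the report takes 8 requests with a 4K window, 4 with 8K, and a single request with 32K. Splitting also loses context across chunks, which is why tasks like summarizing a whole report in one pass are likely to benefit most from the larger window.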


Who will use OpenAI Foundry? Possibilities vs. reality

The potential of OpenAI Foundry and its new GPT models is huge. However, we need to remember that if the information in the screenshots is accurate, Foundry is an offer for the biggest players, who can afford to spend roughly $264K to $1.58M a year on platform access alone.

Who will benefit from using OpenAI Foundry in the first place?

- Technology companies that offer natural language processing products or services, such as chatbots, virtual assistants, or machine translation systems, as they may require advanced GPT models to improve the accuracy and functionality of their products.

- Academic institutions or research labs that may use advanced GPT models for natural language processing research, including language modeling, sentiment analysis, or text classification.

- Financial institutions that could use advanced GPT models for automated trading, risk assessment, or fraud detection.

- Government agencies that could use the platform to make legislative processes more effective, e.g., by improving the classification and summarization of legal documents and regulations.

- Or any other company or organization that meets two crucial conditions: having access to large volumes of data and a secured budget for such a project.

It is important, though, to keep Foundry and GPT-4 apart. It is still not clear whether the most powerful models will be available outside Foundry. GPT-3.5 Turbo already is. If the other two models follow the same path, it will be a huge step toward democratizing AI as a new technology platform.

What’s the expected OpenAI Foundry release date?

Since the release of ChatGPT in November 2022, OpenAI and its GPT models have been in the spotlight. Official news and unconfirmed leaks, facts and opinions, announcements and suppositions: every piece of information, true or fake, has the potential to go viral.

As of today, March 9, 2023, OpenAI Foundry is still just a rumor. Widely discussed, impatiently awaited, yet not officially confirmed by OpenAI. Once it is launched, it may revolutionize the way the corporate world uses AI. The date of this release, however, is still unknown – it is as likely to happen next week as in 3 months. If you want to ensure you don’t miss it and get a chance to adopt OpenAI Foundry before your competitors do, fill out the form below.

Sign up to receive the news about Foundry, GPT, and generative AI.