Motivation

When building microservices, one of the most important questions is: How will your services communicate with each other? We asked ourselves this question a few years back, and the initial answer was every developer’s favorite: “It depends.” As unsatisfying as that sounds, it makes sense once you think about it. This article presents a case study of using message brokers with microservices.

Synchronous vs. asynchronous communication

There are two basic cases of communication: either you need data from another service to proceed with your request, or you want to inform others about your data or about an event that has just happened. The first case is simple: a client makes a request and waits until it gets a response.

Let’s take an example based on a pizza house application. Its core functionality is, of course, ordering a pizza. To do that, we make a request describing the pizza we would like and send it to the component responsible for taking orders. We then expect an immediate response with information about:

- Whether the order is accepted,

- What the price is,

- What the expected delivery time is.

This first step shows the fundamental rule of synchronous communication: the client waits until it gets a response.
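To make the synchronous step concrete, here is a minimal Java sketch. `OrderService`, `OrderConfirmation`, the price list and the fixed 40-minute estimate are all hypothetical; the only point is that the caller’s thread does not continue until `placeOrder` returns an answer carrying the three pieces of information listed above.

```java
import java.math.BigDecimal;
import java.time.Duration;
import java.util.Map;

// Hypothetical order-taking component; the caller blocks until placeOrder returns.
final class OrderService {
    // Assumed price list, hard-coded for the sketch.
    private static final Map<String, BigDecimal> PRICES = Map.of(
            "margherita", new BigDecimal("8.50"),
            "pepperoni", new BigDecimal("10.00"));

    // The synchronous answer: accepted?, price, expected delivery time.
    record OrderConfirmation(boolean accepted, BigDecimal price, Duration expectedDelivery) {}

    OrderConfirmation placeOrder(String pizza) {
        BigDecimal price = PRICES.get(pizza);
        if (price == null) {
            // Unknown pizza: the rejection is still returned synchronously.
            return new OrderConfirmation(false, BigDecimal.ZERO, Duration.ZERO);
        }
        // Assumed fixed estimate; a real service would calculate it.
        return new OrderConfirmation(true, price, Duration.ofMinutes(40));
    }
}

public class SyncOrderDemo {
    public static void main(String[] args) {
        OrderService service = new OrderService();
        // The client thread waits on this call until the confirmation comes back.
        OrderService.OrderConfirmation c = service.placeOrder("margherita");
        System.out.println(c.accepted() + " " + c.price() + " " + c.expectedDelivery().toMinutes() + " min");
        // prints: true 8.50 40 min
    }
}
```

In a real microservice this call would typically travel over the network (for example as an HTTP request), but the shape is the same: request in, response back, client blocked in between.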

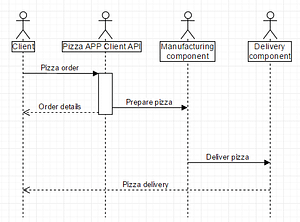

But that is not the end of the job for our application. We still have to make the pizza and deliver it to the customer. From the application’s perspective, we have to pass the request to a manufacturing component, which prepares the pizza. The problem is that making a pizza takes a considerable amount of time, and we have more clients to serve, so we cannot just wait for the pizza and block everything else. The solution is an asynchronous communication model: the order-taking component only sends the order to the proper component, forgets about it and starts serving the next client. When the manufacturing component is available, it picks up the order and makes the pizza. When it is done, it sends another notification, which the delivery component handles as soon as possible. With this architecture, every component works without unnecessary pauses, regardless of whether other components are busy, and the pizza is delivered without needless delays. The entire process is modeled in the diagram below:

Communication from the client to the application is synchronous, but then the messages inside the app are sent asynchronously.
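The asynchronous part of this flow can be sketched in-process, with `java.util.concurrent.BlockingQueue` standing in for broker queues. This is an illustration under simplifying assumptions, not a broker: the component names are hypothetical, the `__STOP__` sentinel exists only so the demo terminates, and nothing is persisted.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class AsyncPizzaPipeline {
    // Demo-only sentinel telling each stage to shut down.
    static final String STOP = "__STOP__";

    public static List<String> run(List<String> orders) throws InterruptedException {
        BlockingQueue<String> kitchenQueue = new LinkedBlockingQueue<>();  // order taking -> manufacturing
        BlockingQueue<String> deliveryQueue = new LinkedBlockingQueue<>(); // manufacturing -> delivery
        List<String> delivered = new ArrayList<>(); // written only by the courier thread

        Thread manufacturing = new Thread(() -> {
            try {
                while (true) {
                    String order = kitchenQueue.take();   // waits only when there is no work
                    if (order.equals(STOP)) {
                        deliveryQueue.put(STOP);          // pass the shutdown downstream
                        return;
                    }
                    deliveryQueue.put("baked " + order);  // notify delivery, then move on
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread courier = new Thread(() -> {
            try {
                while (true) {
                    String pizza = deliveryQueue.take();
                    if (pizza.equals(STOP)) return;
                    delivered.add(pizza);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        manufacturing.start();
        courier.start();

        // The order-taking side only enqueues; it never waits for a pizza to be made.
        for (String order : orders) {
            kitchenQueue.put(order);
        }
        kitchenQueue.put(STOP);

        manufacturing.join();
        courier.join(); // join() also makes the courier's writes to 'delivered' visible here
        return delivered;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(List.of("margherita", "pepperoni")));
        // prints [baked margherita, baked pepperoni]
    }
}
```

The order-taking loop only calls `put` and moves on, and each stage blocks on `take` only when it has nothing to do, which is the decoupling described above.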

Message queuing services

In our pizza application, there is only one tricky question: How do we design such asynchronous communication? Let’s take a closer look at the requirements:

- We have to be able to send a message whether or not anyone is able to handle it immediately,

- We should be able to send multiple messages, and they should be handled in FIFO order,

- Messages must be able to wait until they are taken,

- Messages must not disappear,

- Each message should be handled only once,

- We shouldn’t be forced to know who will handle the message,

- We should be able to inspect all waiting messages,

- We should be able to easily scale senders or receivers.
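Several of these requirements can be illustrated with a small in-memory queue: FIFO order, messages waiting until they are taken, each message handled once, senders not knowing the receivers, browsing waiting messages, and scaling receivers as competing consumers. `InMemoryQueue` is a hypothetical stand-in written for this sketch; it deliberately does not cover durability (“messages cannot disappear”), which is exactly what a real broker’s persistence adds.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-memory stand-in for a broker queue. NOT durable: nothing survives a restart.
final class InMemoryQueue<T> {
    private final LinkedBlockingQueue<T> messages = new LinkedBlockingQueue<>();

    // The sender does not need to know who (if anyone) will consume the message.
    void send(T message) { messages.add(message); }

    // Returns the oldest message (FIFO) or null if empty. poll() removes the element
    // atomically, so a message is handed to exactly one consumer.
    T receive() { return messages.poll(); }

    // Snapshot of the waiting messages without consuming them.
    List<T> browse() { return new ArrayList<>(messages); }
}

public class CompetingConsumersDemo {
    // Sends messageCount messages, then drains them with several consumer threads.
    // Returns how many times each message was handled.
    public static Map<Integer, Integer> consumeWith(int consumers, int messageCount) throws InterruptedException {
        InMemoryQueue<Integer> queue = new InMemoryQueue<>();
        for (int i = 0; i < messageCount; i++) queue.send(i); // messages wait: no consumer yet

        Map<Integer, Integer> handled = new ConcurrentHashMap<>();
        List<Thread> workers = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            Thread t = new Thread(() -> {
                Integer m;
                while ((m = queue.receive()) != null) {
                    handled.merge(m, 1, Integer::sum);
                }
            });
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) t.join();
        return handled;
    }

    public static void main(String[] args) throws InterruptedException {
        InMemoryQueue<String> q = new InMemoryQueue<>();
        q.send("order-1");
        q.send("order-2");
        System.out.println(q.browse());  // [order-1, order-2]
        System.out.println(q.receive()); // order-1

        Map<Integer, Integer> handled = consumeWith(3, 1000);
        boolean onceEach = handled.size() == 1000 && handled.values().stream().allMatch(n -> n == 1);
        System.out.println(onceEach);    // true
    }
}
```

Scaling receivers here simply means starting more threads against the same queue; because each `poll` removes its message atomically, no two consumers handle the same one.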

That is quite a list, and none of these requirements is trivial. Fortunately, message brokers are here to help us.

After some research, we came up with a shortlist of candidates: ActiveMQ, RabbitMQ, ØMQ and Apache Kafka.

The first two are ‘first-class’ enterprise message brokers with built-in queues, topics, a publish-subscribe model, persistence support, web panels with monitoring tools, scalability options and many other features that we highly appreciate. On the other hand, ØMQ and Apache Kafka clearly outperform the other two in raw throughput, but they lack the ready-made infrastructure and monitoring tools we wanted: ØMQ is a broker-less messaging library, and Kafka ships without a built-in web panel. That makes them better suited to designs where you are willing to build such infrastructure yourself.

After a tough debate, we concluded that we were looking for a general-purpose solution for common use cases. Our performance requirements are usually not very strict, so despite the performance advantages of ØMQ and Apache Kafka, we preferred to avoid the overhead of implementing messaging infrastructure ourselves when a ready-made one was available. The decision was to use either ActiveMQ or RabbitMQ, scaled horizontally.

ActiveMQ vs. RabbitMQ

That left two big open-source players, both with an impressive list of messaging capabilities. Choosing between them was hard, but a side-by-side comparison made it a bit easier. The table below lists the features and characteristics of both brokers.

| Feature/characteristic | ActiveMQ (Apache) | RabbitMQ (Pivotal) |
|---|---|---|
| Implementation language | Java | Erlang |
| Main focus | follow the JMS standard (API), with the protocol as an implementation detail | follow the AMQP protocol; does not implement JMS in the base version |
| JMS | 2.0 and 1.1 | commercial plugin for 1.1 |
| AMQP* | 1.0 | 0.9.1 (1.0 via plugin) |
| Secondary protocols | STOMP, MQTT, MSMQ, WCF | STOMP, MQTT |
| Key elements | Queues, topics | Exchanges, bindings, routing keys, queues |
| Web panel | yes (no monitoring, administration options) | yes |
| Direct queue model | yes | yes |
| Publish/subscribe model | yes | yes |
| Persistence | yes (KahaDB) | yes (Mnesia + custom file format) |
| Scalability | yes | yes |
| Guaranteed delivery | yes | yes |
| Clients for most common languages (C++, Java, .NET, Python, PHP, Ruby, …) | yes | yes |
| Broker architecture | central broker or p2p | central broker |
| EIP, Camel integration | yes | yes |
| Support | active | active |

*AMQP 0.9.1 and 1.0 should be treated as different protocols rather than as an upgrade. As RabbitMQ’s Simon MacMullen put it: “it’s such a big difference from 0-9-1 that I view it as a different protocol.”

Feature-wise, they are quite similar, but there were a few things that convinced us to try ActiveMQ.

- Easy to use in integration tests: ActiveMQ is written in Java, the language we use most. It’s trivial to start an embedded broker in tests and work with real queues. Since RabbitMQ is written in Erlang, you cannot embed it and run it on the JVM.

- JMS standard: we have some experience with JMS, and we always prefer to use standards.


ActiveMQ in practice

Introducing message brokers to our projects was a great decision. Our communication and synchronization problems disappeared, and publishing information to other services became trivial. We got a lot of feedback from developers on how good the idea was and how well it fits microservices. However, we also received some negative comments about ActiveMQ itself. The major concerns were that monitoring and administration via the web panel were annoying, that our implementation of publishers/subscribers was not ideal, and that the documentation was not perfect. Those were subjective opinions rather than real problems. Then we came across an unexpected issue: ActiveMQ does not support load balancing of messages in the publish-subscribe model out of the box (OOTB). From the documentation:

“A JMS durable subscriber MessageConsumer is created with a unique JMS clientID and durable subscriber name. To be JMS compliant only one JMS connection can be active at any point in time for one JMS clientID, and only one consumer can be active for a clientID and subscriber name. i.e., only one thread can be actively consuming from a given logical topic subscriber. This means we cannot implement

- load balancing of messages.

- fast failover of the subscriber if that one process running that one consumer thread dies.”

Shortly after that paragraph, the documentation describes “Virtual Topics” as a workaround. It works and solves the problem; however, we don’t like relying on such techniques, especially since our apps are designed to be easily scalable, which would mean configuring virtual topics everywhere.
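The idea behind the Virtual Topics workaround (and behind binding one queue per service to a RabbitMQ exchange) can be sketched in memory: publishing copies a message into one queue per logical subscriber, and instances of the same subscriber compete on that shared queue. The classes below are hypothetical and single-threaded; `drainRoundRobin` simulates instances taking turns, roughly like a broker dispatching round-robin to the consumers of one queue.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical topic that fans out to one queue per subscriber group (illustrative only).
final class FanOutTopic {
    private final Map<String, LinkedBlockingQueue<String>> groupQueues = new LinkedHashMap<>();

    // Each logical subscriber (e.g. a service) gets exactly one queue, shared by its instances.
    synchronized LinkedBlockingQueue<String> subscribe(String group) {
        return groupQueues.computeIfAbsent(group, g -> new LinkedBlockingQueue<>());
    }

    // Publish-subscribe: every group's queue receives its own copy of the message.
    synchronized void publish(String message) {
        for (LinkedBlockingQueue<String> q : groupQueues.values()) q.add(message);
    }
}

public class VirtualTopicDemo {
    // Simulates 'instances' consumers of one queue taking turns; returns what each one got.
    static List<List<String>> drainRoundRobin(LinkedBlockingQueue<String> queue, int instances) {
        List<List<String>> perInstance = new ArrayList<>();
        for (int i = 0; i < instances; i++) perInstance.add(new ArrayList<>());
        String m;
        int turn = 0;
        while ((m = queue.poll()) != null) {
            perInstance.get(turn).add(m);
            turn = (turn + 1) % instances;
        }
        return perInstance;
    }

    public static void main(String[] args) {
        FanOutTopic orders = new FanOutTopic();
        LinkedBlockingQueue<String> billing = orders.subscribe("billing");
        LinkedBlockingQueue<String> delivery = orders.subscribe("delivery");
        for (int i = 1; i <= 4; i++) orders.publish("order-" + i);

        // Two billing instances share one queue: each order is billed exactly once.
        System.out.println(drainRoundRobin(billing, 2));  // [[order-1, order-3], [order-2, order-4]]
        // The single delivery instance still sees every order.
        System.out.println(drainRoundRobin(delivery, 1)); // [[order-1, order-2, order-3, order-4]]
    }
}
```

Each group still receives every order (publish-subscribe), while within the billing group each order is processed by only one instance (load balancing).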

Spring and RabbitMQ to the rescue

At the same time, we decided to change our technology stack and adopted Spring Boot (more on that in the blog post ‘get-along-with-your-spring-boot-starter’). We quickly realized that this was a good opportunity (and probably the only one) to change our messaging provider. The choice was obvious: RabbitMQ, especially since the Spring Framework and RabbitMQ were both backed by the same company, Pivotal.

After a while, we prepared a set of starters that extend Spring Boot (https://github.com/neotericeu/neo-starters/), including neo-starter-rabbitmq. Spring’s support for RabbitMQ is great, but we wanted to extend it with additional features (see the wiki in our repo for details). It turned out well: we kept all the features we had with ActiveMQ and gained some new ones, such as:

- load balancing between subscribers,

- subscriber failover,

- great administration/monitoring web panel,

- better documentation,

- data types can be transferred directly over a queue without manual serialization/deserialization,

- routing key patterns.
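Routing key patterns refer to RabbitMQ’s topic exchanges, where routing keys are dot-separated words and a binding key may use `*` (exactly one word) and `#` (zero or more words). The matcher below is an illustrative re-implementation of those documented rules, not RabbitMQ’s actual code, and the `pizza.…` keys are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative topic-exchange matching: '*' = exactly one word, '#' = zero or more words.
public final class TopicMatcher {
    private TopicMatcher() {}

    // Splits "a.b.c" into [a, b, c]; an empty key has no words.
    private static List<String> words(String key) {
        return key.isEmpty() ? List.of() : Arrays.asList(key.split("\\.", -1));
    }

    public static boolean matches(String bindingKey, String routingKey) {
        return match(words(bindingKey), 0, words(routingKey), 0);
    }

    private static boolean match(List<String> pattern, int i, List<String> words, int j) {
        if (i == pattern.size()) {
            return j == words.size(); // pattern used up: all words must be used up too
        }
        String token = pattern.get(i);
        if (token.equals("#")) {
            // '#' may absorb any number of the remaining words; try every split point.
            for (int k = j; k <= words.size(); k++) {
                if (match(pattern, i + 1, words, k)) return true;
            }
            return false;
        }
        if (j == words.size()) return false; // words used up, pattern not
        if (token.equals("*") || token.equals(words.get(j))) {
            return match(pattern, i + 1, words, j + 1);
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(matches("pizza.*.created", "pizza.order.created")); // true
        System.out.println(matches("pizza.*", "pizza.order.created"));         // false
        System.out.println(matches("pizza.#", "pizza.order.created"));         // true
        System.out.println(matches("#.created", "pizza.order.created"));       // true
    }
}
```

With patterns like these, a subscriber can bind to a whole family of events (for example everything under `pizza.`) without the publisher knowing about it.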

There were also downsides:

- a weaker redelivery mechanism (it blocks the subscriber), which requires enhancements,

- integration test support: for simple tests, we can always unit test producers and subscribers separately and use mocks in integration tests; however, we would also like to test against real queues, and to do so we will have to integrate Apache Qpid.
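Testing producers separately, as described above, gets easier when business code depends on a small publishing interface rather than on the messaging library directly. `MessagePublisher`, `OrderEventsProducer` and `RecordingPublisher` are hypothetical names for this sketch; in production, an adapter implementing the interface could delegate to Spring AMQP’s `RabbitTemplate` (for example its `convertAndSend` method), while tests use the in-memory fake shown here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical port between business code and the messaging library.
interface MessagePublisher {
    void publish(String routingKey, String payload);
}

// Hypothetical producer under test: it only knows the interface, not the broker.
final class OrderEventsProducer {
    private final MessagePublisher publisher;

    OrderEventsProducer(MessagePublisher publisher) { this.publisher = publisher; }

    void orderAccepted(String orderId) {
        publisher.publish("pizza.order.accepted", orderId);
    }
}

// Test double that records published messages in memory instead of sending them.
final class RecordingPublisher implements MessagePublisher {
    record Sent(String routingKey, String payload) {}

    final List<Sent> sent = new ArrayList<>();

    @Override
    public void publish(String routingKey, String payload) {
        sent.add(new Sent(routingKey, payload));
    }
}

public class ProducerTestSketch {
    public static void main(String[] args) {
        RecordingPublisher fake = new RecordingPublisher();
        new OrderEventsProducer(fake).orderAccepted("order-42");
        // Inspect what would have gone to the broker, without running one.
        System.out.println(fake.sent);
    }
}
```

A fake like this covers the producer’s own logic only; verifying real queue behavior still needs a running broker, which is where an embedded one such as Apache Qpid comes in.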

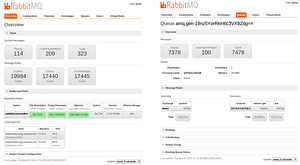

We are using Spring Stack with RabbitMQ for new apps and trying to migrate old apps to RabbitMQ if needed. It looks promising so far, and it’s much easier to handle queues. We also have a lot more information about queues, messages and connections. Web panel example:

So far, we’ve covered the motivation for and general purpose of messaging systems, as well as the reasons we switched from ActiveMQ to RabbitMQ. We are now ready to go into the technical details: the messaging contract, queue architecture patterns, ways of handling the flow of messages, dead letters and many other interesting features. Stay tuned!