What does the artificial intelligence implementation process look like?

Artificial intelligence is a cutting-edge solution for a wide range of industries, including human resources and customer service in the broad sense. That’s not just a marriage of convenience – AI brings relief to a wide range of products and services. The constant growth and development of technologies necessitates incorporating new measures to stay ahead of the competition and succeed in your industry.

To start the AI transformation of your company, you can implement a small project that works on part of your data and addresses narrow business goals. Not sure which area you would like to assign to AI? You can start with chatbots, a form of cognitive technology that lets users interact with an app through natural-language conversation. The rise of Siri, Cortana, and Alexa is a visible sign that the giants are incorporating AI throughout their tech stacks. Deciding which solution will have the best impact on your business may be tough, but there is help for that as well: our experts can help you decide which areas of your operations could benefit from AI enhancements and boost your results.

Although it may seem huge, this tech revolution is egalitarian and certainly not reserved exclusively for the market giants. As complicated as it may seem, artificial intelligence is a way of extending the possibilities that traditional analytics give. It does so by exploring far more combinations of predictive variables than a manual analysis could cover. Your data has business potential that needs to be unlocked before you can benefit from it, and the sooner, the better. If you have ever found yourself in the situation of the cat (shown below) but wonder how it is done, this is the right read for you.

In this article, I briefly describe the process of implementing artificial intelligence in your operations.

Metrics

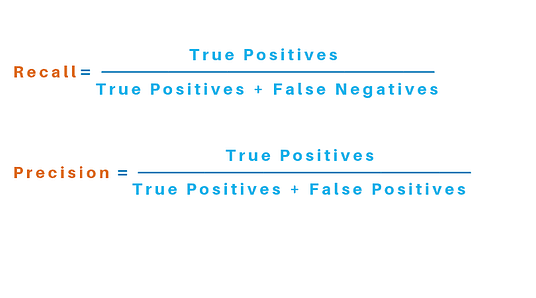

The first step in the AI implementation process is determining the metrics that impact your business. At this point, we define which measures can be improved and prepare a list of metrics together with forecasts of how we expect the models to affect the business, in the form of “improve metric X by Y%”. The metrics definition also covers model classification metrics: precision (also known as the positive predictive value) expresses the proportion of data points the model marked as relevant that are actually relevant, while recall expresses the proportion of all relevant instances in the dataset that the model managed to identify.
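As a minimal sketch of these two metrics, the snippet below computes precision and recall for binary labels. The function name `precision_recall` and the sample labels are illustrative assumptions, not part of any particular toolkit.

```python
def precision_recall(actual, predicted):
    """Return (precision, recall) for binary labels, where True means 'relevant'."""
    pairs = list(zip(actual, predicted))
    true_pos = sum(1 for a, p in pairs if a and p)
    false_pos = sum(1 for a, p in pairs if not a and p)
    false_neg = sum(1 for a, p in pairs if a and not p)
    # Precision: of everything the model marked relevant, how much really is.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    # Recall: of everything that really is relevant, how much the model found.
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Hypothetical ground truth and model output for six items.
actual = [True, True, True, False, False, True]
predicted = [True, False, True, True, False, False]
precision, recall = precision_recall(actual, predicted)
```

Here the model marks three items as relevant and two of them are correct, while it finds two of the four truly relevant items.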

Requirements & data

The next step of the process includes the assessment of available datasets, where we determine:

- what data you will need for successful implementation,

- what data you have within your company,

- what data is available outside your company for free (e.g. weather data),

- what data is available outside your company commercially (and if using commercial data would bring enough return assuming business metric goals are met).

When it comes to the ability and difficulty to combine datasets, we determine the following aspects:

- whether you can get up-to-date info for each dataset and how often each dataset should be updated,

- how much time you need to prepare an “Excel-like” table combining all the datasets.

Initially, we focus on datasets that are easy to update and combine, so that the model can be “fed” with data for scoring. Subsequently, we work on attributes that are considered “usage metrics”, e.g. take the average number of offers opened monthly over the last year and calculate the difference between that average and last month’s figure.
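The usage metric from the example above can be sketched in a few lines. The function name `usage_delta` and the monthly counts are hypothetical; the only assumption about the data is that it is ordered from the oldest month to the most recent one.

```python
from statistics import mean


def usage_delta(monthly_counts):
    """Last month's count minus the average over the whole window."""
    if not monthly_counts:
        raise ValueError("need at least one month of data")
    return monthly_counts[-1] - mean(monthly_counts)


# Hypothetical number of offers opened per month over the last year, oldest first.
offers_opened = [12, 9, 14, 10, 11, 13, 8, 12, 15, 10, 9, 5]
delta = usage_delta(offers_opened)
```

A negative value means the customer opened fewer offers last month than in a typical month of the past year, which may be a useful attribute for the model.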

This part of the implementation process helps to declare how often we need to generate scores (you will usually be fine with per day or per week scores instead of scores after every event) and update models via:

- generating batch predictions, which are generally much cheaper than transactional scoring but are not feasible if you need to update scores after each “event”,

- generating transactional scores, which are much more expensive but give you up-to-date info on every item being scored.

Furthermore, it also helps to determine whether you need to update models immediately through retraining APIs or can manually create and operationalize new versions of the model (as is usually done).
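The difference between batch and transactional scoring described above can be sketched as follows. This is only an illustration: `score` is a hypothetical stand-in for a trained model, and the field names `customer_id` and `offers_opened` are assumptions.

```python
def score(record):
    """Hypothetical stand-in for a trained model: returns a score in [0, 1]."""
    return min(1.0, record["offers_opened"] / 20)


def score_batch(records):
    """Batch scoring: score every record in one scheduled run (e.g. nightly)."""
    return {record["customer_id"]: score(record) for record in records}


def on_event(scores, record):
    """Transactional scoring: rescore a single record right after an event."""
    scores[record["customer_id"]] = score(record)
    return scores[record["customer_id"]]


records = [
    {"customer_id": "a", "offers_opened": 4},
    {"customer_id": "b", "offers_opened": 10},
]
scores = score_batch(records)  # one pass over all records
fresh = on_event(scores, {"customer_id": "a", "offers_opened": 5})  # one record, right away
```

In the batch variant, scores can be stale until the next run. In the transactional variant, every event triggers a model call, which is where the extra cost comes from.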

Next, we check if there are any pretrained models available for you to use or if there is a need to build a model yourself. Amazon Rekognition, Azure Cognitive Services and Google Vision/Speech APIs provide ready-to-use accurate models for OCR, image, speech and text analysis for a fraction of the cost and time to build them in-house, speeding up the time-to-market.

Read also: How to increase revenue with predictive analytics

The data preparation process

Building data models to harness the possibilities hidden in your resources will be a necessity in the near future. Organizing your data also helps to control the data flood that is gradually becoming a challenge in various industries. Artificial intelligence gives you both information and insight, but to get a satisfying final result, you first need to provide it with information yourself. Data modeling is necessary for your enterprise to fully benefit from the amount of information it obtains. What has to be prepared before deploying AI in your product, and what can you do on your own in this matter?

The data you want to use in this process has to be selected and transformed into a format suitable for future use. Depending on the scale of the process, a certain number of records (e.g. 50,000 source documents divided into 5,000 categories) will be requested, all labeled with categories for the model to use. The data can be provided in various formats, including .txt, .zip, and others.

The data provided for the AI deployment process should be of the best possible quality, as weak input produces weak output. How can you tell if your data is of good quality? Make the training data you “feed” to the machine as similar as possible to the data the AI will finally work on, including all the different kinds of documents you process, and consider their length, wording, style, content, and authors. Diversity boosts the learning process, but the features of a document that determine its label need to be easily recognizable. To ensure efficient implementation, use documents that can be labeled by… a human. If a person cannot properly label a document after reading it, the machine won’t be able to do it either. Models also train best on common labels, which means that removing the least frequent tags (labels) from your dataset improves the accuracy of the process. No matter how mundane this preparation may seem, at this step you can already see how AI will affect your business by making the data you gathered work for you and your goal.
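Removing the least frequent labels can be sketched as below. The function name `drop_rare_labels`, the `min_count` cut-off, and the sample documents are illustrative assumptions; the right cut-off depends on your dataset.

```python
from collections import Counter


def drop_rare_labels(documents, min_count):
    """Keep only (text, label) pairs whose label occurs at least min_count times."""
    counts = Counter(label for _, label in documents)
    return [(text, label) for text, label in documents if counts[label] >= min_count]


# Hypothetical labeled documents as (text, label) pairs.
documents = [
    ("invoice 001", "invoice"),
    ("invoice 002", "invoice"),
    ("contract A", "contract"),
    ("contract B", "contract"),
    ("misc memo", "memo"),
]
filtered = drop_rare_labels(documents, min_count=2)
```

With these assumptions, the single “memo” document is dropped and the four documents with common labels remain.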

Process

The training part starts with preparing the training data, which means setting up the mechanisms that combine records from multiple data sources into one. For batch scoring, we build mechanisms that prepare DB and/or CSV files with all records integrated, while for transactional scoring, you will most probably have a DB with pre-integrated data in which items are updated after each event and the updated records are resent for scoring.
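A minimal sketch of the batch-side preparation, assuming two hypothetical sources keyed by `customer_id`: the records are joined into flat rows and serialized as CSV. Defaulting a missing usage value to 0 is an assumption made for this illustration only.

```python
import csv
import io


def combine_sources(customers, usage):
    """Join two sources on customer_id into flat, table-like rows."""
    usage_by_id = {row["customer_id"]: row for row in usage}
    combined = []
    for customer in customers:
        extra = usage_by_id.get(customer["customer_id"], {})
        # Assumption: customers with no usage record get 0 offers opened.
        combined.append({**customer, "offers_opened": extra.get("offers_opened", 0)})
    return combined


def to_csv(rows):
    """Serialize the combined rows to CSV text, ready for a batch-scoring job."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


customers = [
    {"customer_id": "a", "segment": "retail"},
    {"customer_id": "b", "segment": "business"},
]
usage = [{"customer_id": "a", "offers_opened": 7}]
rows = combine_sources(customers, usage)
csv_text = to_csv(rows)
```

In a real project the sources would be database tables or exported files rather than in-memory lists, but the join-then-export shape stays the same.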

To train the model, you make your data available to the cloud engine that you plan to use for building models. Note that there are different models which serve different goals:

- The classification model is the one that answers yes/no questions or picks one choice from the available options X, Y, Z.

- The regression models answer “how many/how much will it be?” questions.

- Clustering and anomaly detection models provide answers based on analyzing data for the most common or out-of-order values.
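To make the last model type more concrete, here is a deliberately simple anomaly detection sketch that flags values lying far from the mean. The z-score rule, the `threshold` of 2.0, and the sample data are assumptions chosen for illustration; cloud engines typically use more sophisticated methods.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts with one out-of-order value.
daily_orders = [100, 102, 98, 101, 99, 103, 97, 250]
anomalies = find_anomalies(daily_orders)
```

Only the value 250 is flagged here; the other days stay well within the threshold.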

Model operationalization makes the scores from the models available to the people who will use them in your product (most often, developers). Publishing a model usually consists of attaching a score to an individual or event, exposing an API to score data (in batches or one by one), and providing an API to which the data that updates the models is sent.

Finally, you can use the model APIs or scores in your product. Once you are in possession of scores, you can act on them. Typical use-cases are:

- telling users what the risk of event X happening (or not happening) is,

- predicting the price of certain goods you mean to acquire, especially useful in real estate,

- knowing which customer to discount.
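Acting on scores often comes down to a simple rule on top of the model output. The sketch below picks customers to discount, assuming that the scores express churn risk between 0 and 1 and that 0.7 is a sensible cut-off; both are hypothetical choices for illustration.

```python
def customers_to_discount(churn_scores, threshold=0.7):
    """Return the IDs of customers whose churn risk is at or above the threshold."""
    return sorted(cid for cid, risk in churn_scores.items() if risk >= threshold)


# Hypothetical scores returned by the model API.
churn_scores = {"a": 0.91, "b": 0.35, "c": 0.70, "d": 0.12}
selected = customers_to_discount(churn_scores)
```

The threshold is a business decision rather than a modeling one: lowering it reaches more customers at the price of more discounts given away.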

The aftermath of Artificial intelligence implementation

Last but not least, the process needs a verification step, which consists of checking the model for accuracy, precision, and recall, and checking whether the business metrics have actually improved.
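The business side of this check can be sketched against a goal stated as “improve metric X by Y%”. The function names and the sample figures below are hypothetical.

```python
def relative_improvement(baseline, current):
    """Percentage change of a business metric relative to its baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) * 100 / baseline


def target_met(baseline, current, target_pct):
    """True if the metric improved by at least target_pct percent."""
    return relative_improvement(baseline, current) >= target_pct


# Hypothetical example: monthly conversions before and after the model went live.
improvement = relative_improvement(200, 230)
goal_reached = target_met(200, 230, target_pct=10)
```

With these sample figures, the metric grew by 15%, so a 10% goal would count as met.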

In business, AI applications can serve almost any role you would like them to, depending on your organizational needs and the business intelligence derivatives from acquired data. No matter your industry and the main field of expertise, AI can unlock the power of the data collected in your business.

The Gartner survey shows that so far, only a few percent of surveyed companies have already deployed AI in their operations. The race has just begun, as numerous enterprises are completing the preparation stages, so it definitely is the right time to think about how your data can work for your business’ success. Practical business applications of artificial intelligence development services present a wide array of possibilities, and picking the right one might cause confusion. You can drive engagement in CRM, gather social data, optimize processes, and much more. AI-enhanced processes are set to disrupt how enterprises create business value, and implementing artificial intelligence with the right partner is the key to success.

Want to ensure efficient and secure artificial intelligence implementation into your product? We’re here to help you!